It’s very easy to get in the habit of imagining the way that a query should work based on the Logical Order of query processing – the idea that the FROM clause gets evaluated first, followed by the WHERE clause, GROUP BY, and so on – finally ending with whatever is in the SELECT clause. We even get in the habit of creating indexes that focus on the WHERE clause, and this is mostly right.

But it’s only mostly right, and it will often depend on statistics.

There are other situations where statistics have to play a major part in choosing the right plan, of course. In fact, almost every query you ever run will use statistics to work out the best plan. What I’m going to show you in this post is an example of how the statistics end up being incredibly vital in choosing the right plan. It also helps demonstrate an important feature of the way that Scans work, and how to read execution plans.

I’m going to use AdventureWorks2012 for this example. I’m going to ask for the cheapest product according to the first letter of the product name. This kind of query:

    SELECT MIN(ListPrice)
    FROM Production.Product
    WHERE Name LIKE 'H%';

Don’t run it yet. I want to ask you how you’d solve it on paper.

Would you prefer I give you a list of the products sorted by name, or would you prefer I give you that list sorted by price?

If you want the ‘sorted by name’ option, then you’ll have to look through all the products that start with H, and work out which is the cheapest (notice that my predicate is not an equality predicate – if I knew what the name had to be exactly, then I could have an index which ordered by name and then price, and very quickly find the cheapest with that name). This approach could be good if you don’t have many products starting with that particular letter. But if you have lots, then finding them all and then looking for the cheapest of them could feel like too much work. Funnily enough, this is the way that most people would imagine this query being run – applying the WHERE clause first, and applying the aggregate function after that.

On the other hand, if you have lots of products with that particular letter, you might be better off with your list sorted by price, looking through for the first product that starts with the right letter.

Let me explain this algorithm a little more.

If you’re at a restaurant and are strapped for cash, you might want to see what the cheapest thing is. You’d pick the “sorted by price” menu, and go to the first item. But then if you saw it had peanut in, and you have an allergy, then you’d skip it and go to the next one. You wouldn’t expect to have to look far to find one that doesn’t have peanut, and because you’ve got the “sorted by price” menu, you have the cheapest one that satisfies your condition after looking through just a few records.

It’s clearly not the same algorithm as finding all the things that satisfy the condition first, but it’s just as valid. If you’re only going to have to look through a handful of products before you find one that starts with the right letter, then great! But what if there are none? You’d end up having to look through the whole list before you realised.

The Query Optimizer faces the same dilemma, but luckily it might have statistics, so it should be able to know which will suit better.

Let’s create the two indexes – one sorted by Name, one sorted by Price. Both will include the other column, so that the query will only need one of them.

    CREATE INDEX ixNamePrice ON Production.Product (Name) INCLUDE (ListPrice);
    CREATE INDEX ixPriceName ON Production.Product (ListPrice) INCLUDE (Name);

Now let’s consider two queries. Both queries give the same result – $0.00. But that’s not important, I’m only interested in how they run.

    SELECT MIN(ListPrice)
    FROM Production.Product
    WHERE Name LIKE 'I%';

    SELECT MIN(ListPrice)
    FROM Production.Product
    WHERE Name LIKE 'H%';

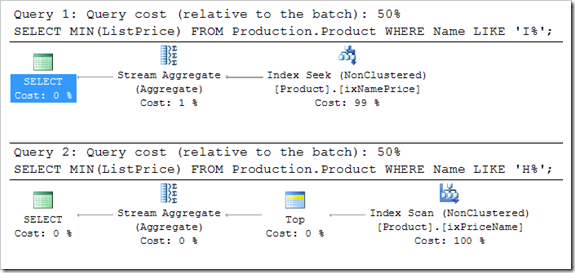

The two queries are almost identical, but they run quite differently.

Ok, they’re fairly similar – they both use a Stream Aggregate operator, for example. And they have similar cost. But significantly, one is performing a Seek, while the other is doing a Scan. Different indexes, but nevertheless a Scan and a Seek.

People will tell you that Scans are bad and Seeks are good, but it’s not necessarily the case. Here, we see that the Scan plan is no more expensive than the Seek plan – it’s just different. We should consider why.

Those two indexes are the two different stories that I described earlier. There are very few products that start with the letter ‘I’, and quite a number that start with ‘H’, and so the Query Optimizer has chosen differently.

There are exactly 10 products that start with I. From a total of 504. That’s less than 2% of the products.

There are 91 products that start with H. That’s 18%. You might not have expected it to be that high, but that’s okay – if SQL has been maintaining statistics for you on this, it hopefully won’t be as surprised as you.
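If you want to check these proportions on your own copy of the database, a query along these lines should do it. It is only a sketch: the column aliases are my own, and the counts you get may differ from the figures quoted here if your data has been modified.

```sql
-- Count products per first letter, with each letter's share of the table.
SELECT LEFT(Name, 1) AS FirstLetter,
       COUNT(*) AS Products,
       CAST(100.0 * COUNT(*) / SUM(COUNT(*)) OVER () AS decimal(5, 2)) AS PercentOfTable
FROM Production.Product
GROUP BY LEFT(Name, 1)
ORDER BY Products DESC;
```

The windowed `SUM(COUNT(*)) OVER ()` gives the table total alongside each group, so the percentage is worked out in a single pass.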

18% – nearly 1 in 5. So by the time you’ve looked at, oh, a dozen records, you will have almost certainly found one that starts with an H. (Actually, the chance of NOT finding one in the first 12 would be power(.82, 12), which is 0.09. That’s just 9%.) If I do a bit of digging into the internals, I can discover that the pages in my index typically have over a hundred records on them each. The chance of not finding a product that starts with an H on that first page – you’d need lottery-scale luck (1 in 444 million).
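That arithmetic can be reproduced directly in T-SQL. The sketch below assumes the matching rows are spread evenly through the price-ordered index, so that every row has the same 91-in-504 chance of starting with H. It also assumes a page of exactly 100 rows, which is a round number chosen for illustration.

```sql
-- Chance of seeing no 'H' product in the first n rows of the price-ordered index,
-- assuming each row matches with probability 91/504 (so misses with 413/504).
SELECT POWER(CAST(413 AS float) / 504, 12)  AS MissInFirst12,   -- roughly 0.09
       POWER(CAST(413 AS float) / 504, 100) AS MissInFirst100;  -- roughly 1 in 444 million
```

The cast to `float` matters: `POWER` returns the data type of its first argument, so a literal like `.82` would be rounded to two decimal places.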

On the other hand, finding the cheapest value among 91 records is a lot more expensive than finding the cheapest among just 10. And getting all 10 records should be a small number of reads too.
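One way to see both stories side by side is to force each query onto the index the optimizer did not pick and compare logical reads. This is a sketch that assumes the two indexes created earlier still exist; the `INDEX` table hint is there purely for experimentation. On a table this small the differences in reads will be modest.

```sql
-- Show logical reads per statement in the Messages tab.
SET STATISTICS IO ON;

-- 'I%' (few matches): the optimizer's own choice, then the price-ordered index forced.
SELECT MIN(ListPrice)
FROM Production.Product
WHERE Name LIKE 'I%';

SELECT MIN(ListPrice)
FROM Production.Product WITH (INDEX(ixPriceName))
WHERE Name LIKE 'I%';

-- 'H%' (many matches): the optimizer's own choice, then the name-ordered index forced.
SELECT MIN(ListPrice)
FROM Production.Product
WHERE Name LIKE 'H%';

SELECT MIN(ListPrice)
FROM Production.Product WITH (INDEX(ixNamePrice))
WHERE Name LIKE 'H%';

SET STATISTICS IO OFF;
```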

But a Scan! Really? It has to look through the whole table, right?

No. That’s not how it works.

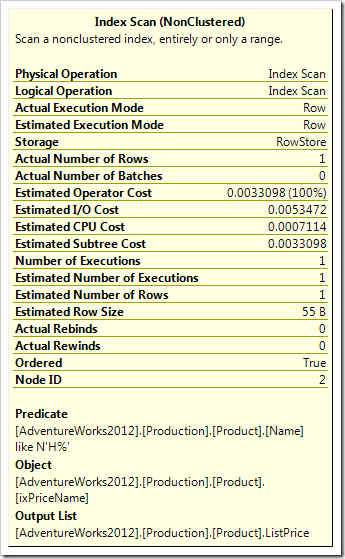

You see, execution plans go from left to right. If you start reading these plans from the right, you’ll start thinking that the whole index has been scanned, when it’s simply not the case. That Top operator asks for a single row from the index, and that’s all it provides. Once that row has been found, the Scan operation stops.

For this information, I don’t even need to pull up the Properties window for the Scan (but I would recommend you get in the habit of doing that). No – this is all available in the Tool Tip. Look at “Actual Number of Rows” – it’s just one.

A predicate is applied – it looks through the index for rows that start with H – but it’s doing this in Order (see Ordered = True), and it’s stopping after the first row is found. Remember I mentioned that there are actually 91 rows that satisfy the predicate? The Scan doesn’t care – it only needs one and it stops right then.
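If it helps to picture what the Top and the ordered Scan are doing together, the plan behaves much like the query below. This is an illustrative rewrite of my own, not something the optimizer literally runs, and it assumes `ListPrice` contains no NULLs.

```sql
-- Roughly what the Top + ordered Scan plan is doing:
-- walk the price-ordered index and stop at the first name that matches.
SELECT TOP (1) ListPrice
FROM Production.Product
WHERE Name LIKE 'H%'
ORDER BY ListPrice ASC;
```

The two forms are not identical in every case: when no rows match, `MIN` still returns a single row containing NULL, whereas the `TOP (1)` query returns no rows at all.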

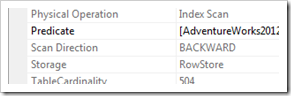

You might figure this is because we are using MIN. What if we needed the MAX though? Well, that’s just the same, except that the Direction of the Scan is BACKWARD (you’ll need F4 for that one).

MIN goes forward, because it’s most interested in the ‘smallest’ ones, MAX will go backward because it wants the ‘largest’. (And as you’d probably expect, if you’d created your index to be descending, then it would be reversed.)

But again – being able to tell which is the better algorithm depends entirely on the statistics being available and accurate.

I see so many systems with bad statistics for one reason or another, typically because the data most frequently queried is the newest data, which makes up only a small percentage of the table. The statistics will think that there is almost no data for ‘today’, as they probably haven’t been updated for at least a few hours.
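A quick way to check how stale the statistics on a table are, and to refresh them, is sketched below. It uses the `Production.Product` table and the `ixNamePrice` index from this post as the example; substitute your own object names. Whether `FULLSCAN` is affordable depends on the size of your table.

```sql
-- When was each statistics object on the table last updated?
SELECT s.name AS StatsName,
       STATS_DATE(s.object_id, s.stats_id) AS LastUpdated
FROM sys.stats AS s
WHERE s.object_id = OBJECT_ID('Production.Product');

-- Inspect the histogram behind the name-ordered index.
DBCC SHOW_STATISTICS ('Production.Product', ixNamePrice) WITH HISTOGRAM;

-- Refresh it by reading every row rather than a sample.
UPDATE STATISTICS Production.Product ixNamePrice WITH FULLSCAN;
```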

When you look at how a query is running, always have a think about how you’d solve it on paper, and remember that you might actually have a better (or worse) picture of the statistics than the Query Optimizer has.

And remember that a Scan is not necessarily bad. I might do another post on that soon as well.

This Post Has 12 Comments

In the seek version performance is much more predictable. It always has to perform the same work.

With the scan version the worst case scenario can be much more expensive than estimated even with perfect statistics due to the modelling assumption that the matching rows will be evenly distributed with respect to the other column. If in fact there is some correlation such that the matching rows for one column value all happen to be at one end of the range of values for the other column then a full scan is needed which can be much worse.

Example of that here

http://stackoverflow.com/questions/7481818/sql-why-is-select-count-mincol-maxcol-faster-then-select-mincol-max/7482342

Hi Martin,

There are also plenty of times when predicates with low selectivity cause a large number of rows to be returned by a Seek, so I would suggest your argument could work on both sides.

I would suggest it’s an example which suits making index choices based on business knowledge of the data model, if you are worried about the statistics being insufficient for good choices to be made.

Rob

Hi Rob,

I’m not saying that the scan plan is definitely worse (at least assuming perfect statistics) just that it can have more variable performance when the rows are not in fact exactly evenly distributed.

Say there are 1,000,000 rows. 1,000 match the seek predicate. Under the even distribution assumption SQL Server will assume that 1,000 (1 million / 1 thousand) rows need to be scanned.

For the seek plan the best, worst, and estimated case are all 1,000 rows

For the scan plan the best, worst, and estimated case are (1, 999,000, 1,000) and if the statistics are not perfect and in fact no rows match at all then the real worst case would be 1 million rows.

If the predicate is made less selective so 10,000 rows now match

For the seek plan the best, worst, and estimated case are all 10,000 rows

For the scan plan the best, worst, and estimated case are (1, 990,000, 100)

Just pointing it out as a potential issue to be considered.

In the real world there may be all sorts of correlations that don’t necessarily occur to one when writing queries.

    SELECT MIN(OrderDate), MAX(OrderDate)
    FROM Orders
    WHERE ProductId = @ProductId

It is highly unlikely that productIds will be evenly distributed throughout the Orders table as new products get launched and old ones get discontinued for example.

Your points are all valid, and again I’ll say that designing indexes around business knowledge is important. Many business scenarios would consistently be much closer to the best case, and it would be foolish to settle for the "least bad worst case" alternative.

Obviously the queries can be forced into either plan, once the developers have considered their options.

And your query example doesn’t work. If you need both MIN and MAX you’d need to approach from both ends, and your equality predicate makes a simple composite index the most effective option.

Actually the link in my first post does show sometimes SQL Server will generate such a plan calculating the MIN and MAX separately (in that case it also has added lookups which make things worse.)

Great post! I really liked the clear explanations. I think I shall use them in real life when trying to explain some of this behaviour to others (or just point them to this blog).

Martin: Yes – if it thinks the range is wide enough and low enough selectivity it can decide to go from both sides. But in that scenario you can solve it easily with a composite index, because the predicate is an equality.

Ian: Thanks

Having so few rows in the table makes this a little contrived. The indexes only have 4 leaf pages to read. All the data is found on one page, and so in both cases it’s a question of scanning a single page for a value.

It would be helpful to show this with more data where the impact is significant.

Ok Simon… how about in AdventureWorksDW, which has 60K records in dbo.FactInternetSales? I get it’s still not huge, but it shows that it’s easy to have a bad plan come out.

    CREATE INDEX ix1 ON dbo.FactInternetSales(OrderDateKey) INCLUDE (UnitPrice);
    CREATE INDEX ix2 ON dbo.FactInternetSales(UnitPrice) INCLUDE (OrderDateKey);

    SELECT MIN(OrderDateKey)
    FROM dbo.FactInternetSales
    WHERE UnitPrice between 0 and 100;
    -- Prefers ix1. 20 reads.

    SELECT MIN(OrderDateKey)
    FROM dbo.FactInternetSales
    WHERE UnitPrice between 600 and 700;
    -- Prefers ix2. 2 reads.

    SELECT MIN(OrderDateKey)
    FROM dbo.FactInternetSales WITH (INDEX(ix2))
    WHERE UnitPrice between 0 and 100;
    -- Forced ix2. 150 reads.

Of course, with a correlation between the two fields, it could be possible to show an example of ix1 being particularly nasty as well.

Rob,

You may be alluding to what the optimizer team refers to as the ascending key problem, whereby data gets added to a table in ascending key order. For large tables (the opposite of the ‘Small’ table you mentioned, I know), this can be mitigated via trace flag 2371. You may also want to look at Connect item 676224: another popular database engine includes the ability for hints to be used on statements that specify:

1. That data in the relevant table(s) should be sampled in order to produce better plans.

2. How aggressively the sampling should be done.

Refer to connect item 676224