CONVERT_IMPLICIT isn’t the only problem with getting data types wrong. You might have the right type, but what if the length is wrong? This post will look at both getting the type wrong and getting the length wrong.

Let’s do some testing. We’ll need a table with indexes. I’d normally use one of the AdventureWorks versions for this, but as they have a tendency to use user-defined types (which I’m not actually a fan of), I’m going to create my own. Also, my example needs to leverage a composite index. I’m only putting a single row in the table, because the amount of data isn’t relevant to what I’m going to show. I’m using a Windows collation, which is the default on my machine. I’ll put a note in later to mention why.

```sql
create table dbo.StringLength (
    pk int primary key,
    id int,
    v50 varchar(50) collate Latin1_General_CI_AS,
    vmax varchar(max) collate Latin1_General_CI_AS,
    n50 nvarchar(50) collate Latin1_General_CI_AS,
    nmax nvarchar(max) collate Latin1_General_CI_AS
);
go
create index ix_v50 on dbo.StringLength (v50, id);
create index ix_n50 on dbo.StringLength (n50, id);
go
insert dbo.StringLength (pk, id, v50, vmax, n50, nmax)
values (1, 1, 'abcdefghij', 'abcdefghij', N'abcdefghij', N'abcdefghij');
go
```

I haven’t indexed the vmax and nmax fields, because you can’t use them as index keys. Of course, there are plenty of arguments for having those fields in your actual tables, but if you’re wanting to be able to search on that data, you might want to consider a full-text index. If the searching is always on the start of the string, you could consider another option, but we’ll come to that later.

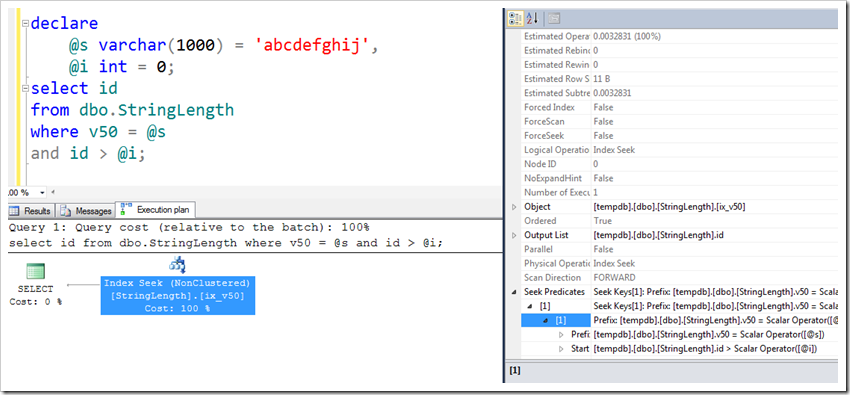

Let’s look at what happens when we do it right, defining variables using the right type.

|

1 2 3 4 5 6 7 |

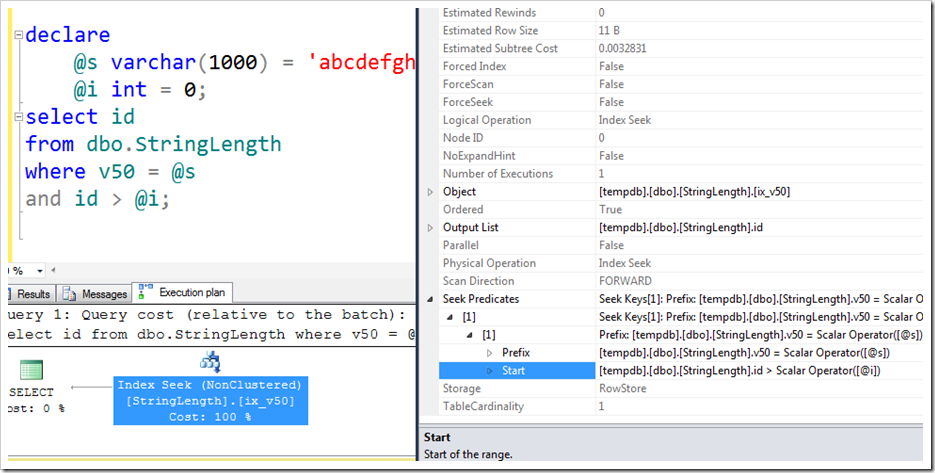

declare @s varchar(1000) = 'abcdefghij', @i int = 0; select id from dbo.StringLength where v50 = @s and id > @i; |

Notice that there is no Predicate property of the Index Seek, only a Seek Predicate, which has both a Prefix and Start. The range of rows returned by the Seek Predicate has a start-point based on a combination of the Prefix and Start, and an end-point which is the end of the Prefix.

If the wrong types are used, we see that a conversion is needed.

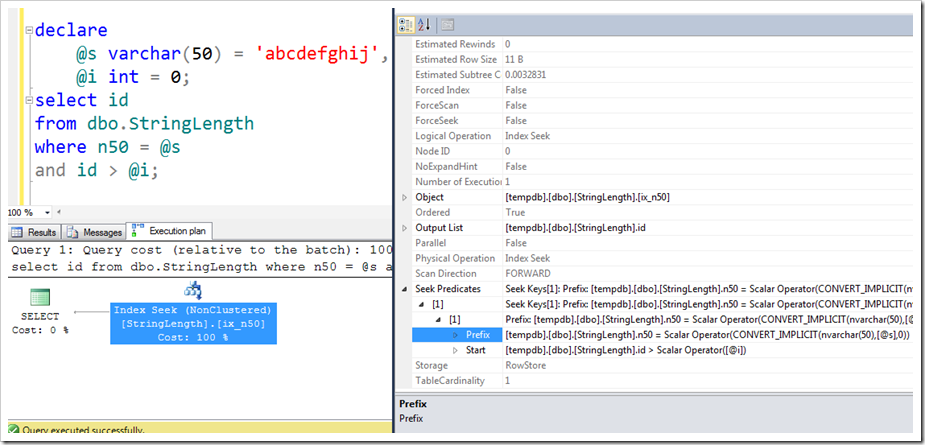

Let’s start by using a varchar(50) parameter, and comparing it to the nvarchar(50) column.
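A sketch of what that query might look like, reusing the dbo.StringLength table from above. The @s and @i variable names are an assumption carried over from the first example.

```sql
-- Assumed example: a varchar(50) variable compared to the nvarchar(50) column
declare @s varchar(50) = 'abcdefghij',
        @i int = 0;

select id
from dbo.StringLength
where n50 = @s   -- @s is implicitly converted to nvarchar to match the column
and id > @i;
```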

We still see no Predicate here, but look at the Prefix. A CONVERT_IMPLICIT is needed because the types don’t match. In case you haven’t heard, this is bad.

But how bad is it? Actually, not very bad at all, because @s is converted into the correct type, and then used in the Seek. You should still avoid it by passing in the correct type, but the cost of converting a parameter to the correct type is not that bad, because it only needs to happen once.

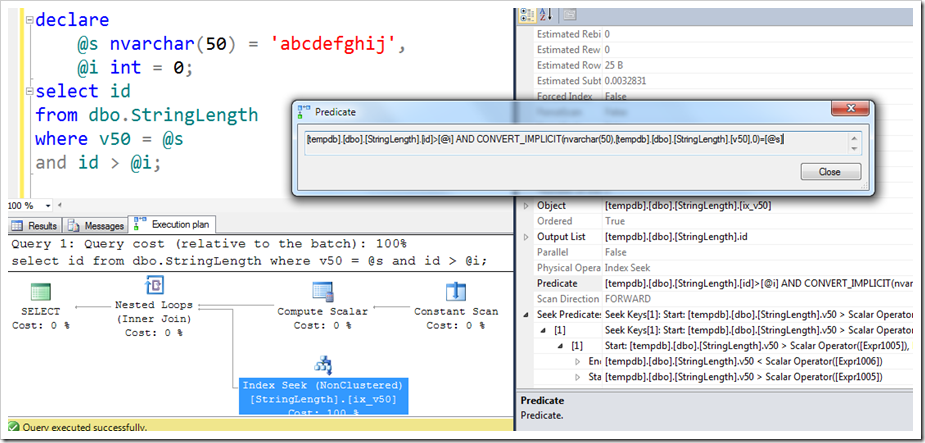

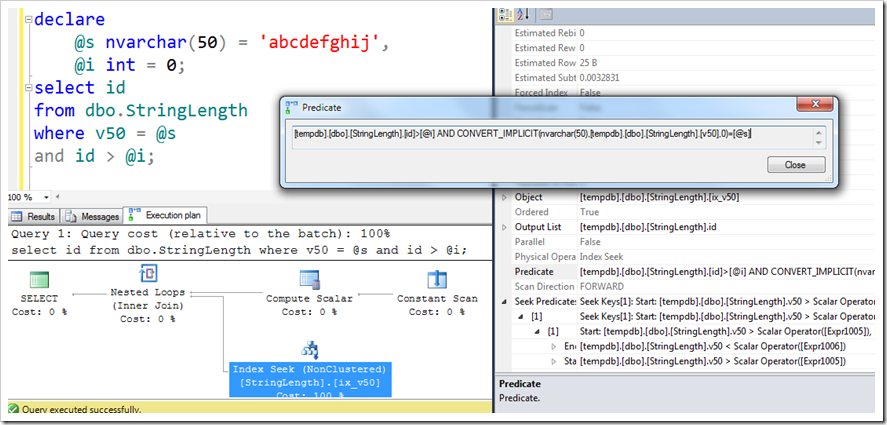

What happens if we do it the other way around, passing in an nvarchar(50) parameter and comparing it to the varchar(50) column?
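Something along these lines, again assuming the same variable names as before:

```sql
-- Assumed example: an nvarchar(50) variable compared to the varchar(50) column
declare @s nvarchar(50) = N'abcdefghij',
        @i int = 0;

select id
from dbo.StringLength
where v50 = @s   -- nvarchar outranks varchar, so the column side needs converting
and id > @i;
```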

Oh!

Straight away, you’ll notice that there’s a different shape to the execution plan. We’ve lost the Prefix in the Seek Predicate, and we have a Predicate (the residual one) property as well. But we do still have an Index Seek. It hasn’t resorted to using an Index Scan as would’ve been the case if we had used a number.

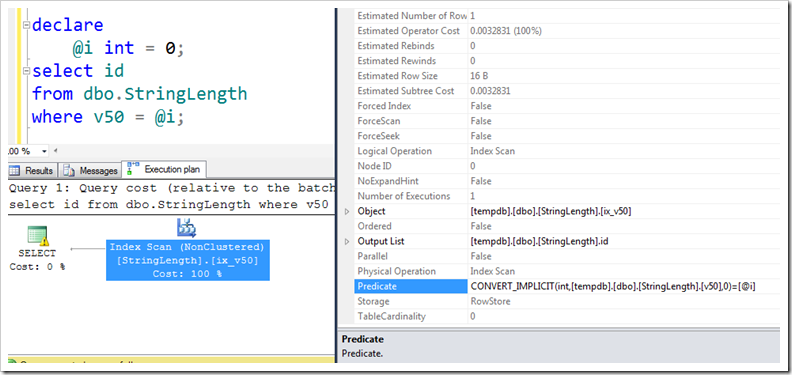

(Just for completeness, let’s truncate the table – to avoid a conversion error – and use a number for the comparison)
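A rough sketch of that test, assuming the comparison is made against an int variable called @i (which matches the expression shown in the plan warning):

```sql
-- Empty the table first: converting 'abcdefghij' to int would raise a conversion error
truncate table dbo.StringLength;
go

declare @i int = 0;

-- int has higher data type precedence than varchar,
-- so v50 is converted for every row rather than @i being converted once
select id
from dbo.StringLength
where v50 = @i;
```

If you’re following along, re-run the insert from the setup script afterwards so that the later examples have a row to find.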

Here we get an Index Scan. No Seek Predicate. The index on v50 is as good as useless because we’re comparing the column to a number. Look what’s going on in the Predicate – we’re converting the v50 field into an integer, and seeing if it’s equal to @i. That’s doing it for every row in the index.

Luckily, we now get a warning about this. See the yellow triangle with an exclamation mark in it on the SELECT operator? If I click on that, I see a warning that says:

Type conversion in expression (CONVERT_IMPLICIT(int,[tempdb].[dbo].[StringLength].[v50],0)) may affect "CardinalityEstimate" in query plan choice, Type conversion in expression (CONVERT_IMPLICIT(int,[tempdb].[dbo].[StringLength].[v50],0)=[@i]) may affect "SeekPlan" in query plan choice

It’s actually two warnings. One is a SeekPlan warning, and one is a CardinalityEstimate warning. It’s the SeekPlan one that has caused the Scan, while the CardinalityEstimate problem means the Query Optimizer has little idea about how many rows to expect out of the Scan operator. (And no, there’s no full stop / period at the end of those warnings. Go figure…)

Anyway, that was just an aside, because I figure there are plenty of posts already out there about this CONVERT_IMPLICIT issue leading to a Scan instead of a Seek. Let’s go back to our situation, where we were dealing with nvarchar and varchar strings, and still had a Seek. This one:

Clearly this is a different situation to a regular CONVERT_IMPLICIT. It’s less harmful, although there is still a big impact, and it’s potentially much more commonplace, as people don’t tend to care quite as much if they see an Index Seek already in play.

Oh, and this behaviour doesn’t happen with SQL collations. If you have a SQL collation, the sort order between varchar and nvarchar is different, and it has to do a Scan, just like when I used a number.

The thing that’s happening here is the same as when you have a datetime column that you’re casting to a date, or when you’re using a LIKE comparison with fixed start. The Query Optimizer uses a function called GetRangeThroughConvert (in the Compute Scalar – you can see it in the XML), which is then used to create a Dynamic Seek. I’ve presented on this before, such as in the SARGability and Residualiciousness talks at SQLBits in 2010 and the PASS Summit in 2011. Paul White (@sql_kiwi) expanded on the GetRangeThroughConvert function in a post from 2012. The seek can’t guarantee to catch everything though, so it uses a Predicate (the residual one) to make sure the value matches exactly.

So why is this so bad? In my presentations I’ve talked about the GetRangeThroughConvert behaviour as being a good thing. More on that further down in the post.

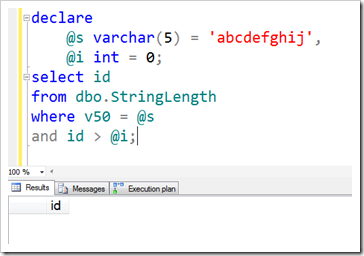

What if we pass in the correct type, but make it too long or too short?

When it’s too long (though we haven’t considered ‘max’ yet), we get the same behaviour as if it were the right length. Interestingly, if you search the XML version of this plan for either 1000 or 50 (except for in the column name), you don’t find it anywhere. It’s as if we passed in the correct value. The same happens if you pass in a string that is too short, but here you need to consider whether you might be wrecking the parameter.

In this situation, my query didn’t return the same results, because @s is only ‘abcde’. But it does this without any kind of warning – you can populate a varchar(5) variable with a longer string and it won’t complain at all.
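A small sketch of the too-short case, assuming a varchar(5) variable and the single-row table from the setup script:

```sql
-- The ten-character literal is silently truncated to fit varchar(5)
declare @s varchar(5) = 'abcdefghij',
        @i int = 0;

select @s as s;   -- returns 'abcde', with no error or warning

-- The search now looks for 'abcde', so the 'abcdefghij' row is not matched
select id
from dbo.StringLength
where v50 = @s
and id > @i;
```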

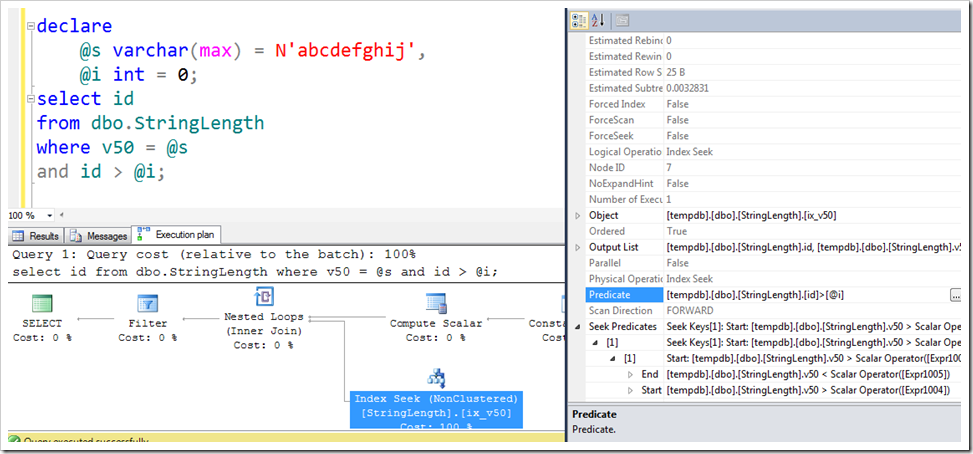

But max is done differently.

Let’s see what happens when we pass in a varchar(max) or nvarchar(max) parameter, and compare it to the limited-length string.
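For the varchar case, the query might look something like this (a sketch, with the variable names assumed as before):

```sql
-- Assumed example: the right type, but declared as max
declare @s varchar(max) = 'abcdefghij',
        @i int = 0;

select id
from dbo.StringLength
where v50 = @s
and id > @i;
```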

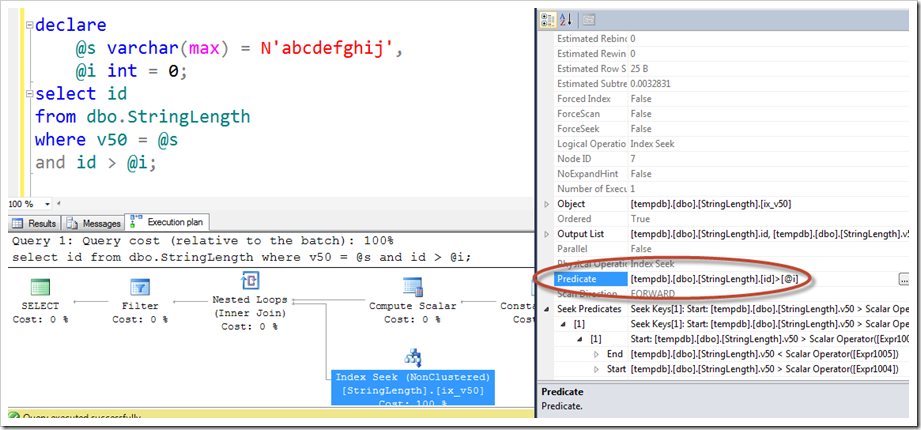

We’re comparing the varchar column to a varchar parameter, but the parameter is defined as a max field, and we have the GetRangeThroughConvert functionality, like what happened with the nvarchar / varchar scenario. But it’s more complicated again – despite the fact that we have a Range, our Residual Predicate doesn’t include the equality check. That check has actually been pulled further left in the plan, in that new Filter operator you see there.

You see, checking a max type is expensive, and involves memory allocation (that parameter is potentially up to 2GB in size), so the Filter is moved to the left as far as possible (SQL won’t ever do a max comparison in a Seek/Scan operator because of the memory allocation). By calling all the other filters (however the filters are done, Joins, Seeks, Residuals) before applying the max filter, the data that gets pulled into the max filter is now as few rows as possible. The Seek will be close to the correct amount, thanks to the GetRangeThroughConvert part, but that final check does still need to take place. It’s a good thing that the check is pulled left, but you should avoid passing in a max parameter so that this check can be done in the Seek Predicate.

So what about the other way around? What if we have a max column, and a limited-length parameter?

In some ways, this is more trivial because you can’t index a max column. This is one of the things that carried over from the days of text and ntext, although you couldn’t define a variable as text or ntext either, so you were less likely to try.

If you can’t index the column, but still want to be able to perform searches that would be index-like, what can you do (apart from using a Full-Text Index)?

Well, you could use a computed column that matches the length of your parameter. The non-clustered index persists the value, although it’s not persisted in the underlying heap / clustered index.

```sql
alter table dbo.StringLength add vmax50 as cast(vmax as varchar(50));
go
create index ix_vmax50 on dbo.StringLength(vmax50, id) include (vmax);
```

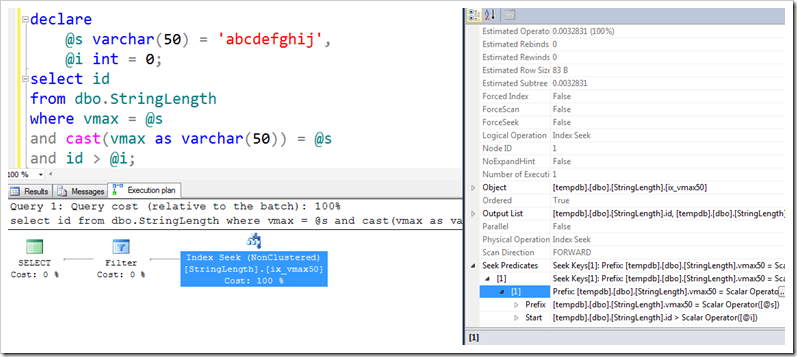

This is similar to what can be done to tune many queries to get around SARGability problems. But it won’t help here unless we change our query, because our query still uses the max column, and implicit conversion makes the shorter one longer, not the other way around. However, if we add an extra predicate to our query, we can achieve what we want:
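One way to write that, as a sketch only. It assumes a varchar(50) variable and the vmax50 computed column and ix_vmax50 index defined above, and the exact form of the extra predicate is a guess:

```sql
declare @s varchar(50) = 'abcdefghij',
        @i int = 0;

select id
from dbo.StringLength
where vmax = @s     -- the original max comparison, still needed for correctness
and vmax50 = @s     -- extra predicate on the computed column, which the index can seek on
and id > @i;
```

The extra predicate doesn’t change the results here: @s is at most 50 characters, so any row where vmax equals @s also has a vmax50 that equals @s.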

The Filter here is still the max check, but now we at least have a more effective seek on the rest of it, thanks to making a column which contains the first part of the potentially-long string.

So you can see that GetRangeThroughConvert functionality is useful, and way better than the alternative of using a Scan.

But GetRangeThroughConvert is actually bad for your query. Honestly.

The reason why it’s bad is because of what has happened to the second predicate that we’re using, the “id > @i” bit. When the type matched properly, it was part of the Seek Predicate. When GetRangeThroughConvert is used, either through the wrong type or by passing in the right type but with the max length, this second predicate gets relegated to the Residual (see the image below). That means that every row that satisfies the string comparison must be checked against this second predicate. It’s like using the phone book and not appreciating that all the “Farley”s are sorted by first name. Even without a second predicate, there may be problems in the plan because of unnecessary sorts, or the avoidance of a Merge Join, simply because the data cannot leverage the second column in an index (or the CIX key if there are no more columns in the key). If you’re looking for Barry Smith in the phone book, but are passing in ‘Smith’ via a varchar(max) parameter, you’ll be scanning all the Smiths looking for Barry. If you’d passed it in using varchar(100), then you could’ve found all the Barry Smiths with your Seek.

It won’t give you a warning, but you’ll find your indexes aren’t being used as effectively as you might like.
