There are quite a few reasons why an Index Scan is better than an Index Seek in the world of SQL Server. And yet we see lots of advice saying that Scans are bad and Seeks are good.

Let’s explore why.

Michael Swart (@MJSwart) is hosting T-SQL Tuesday this month, and wants people to argue against a popular opinion. Those who know me and have heard my present would realise that I often argue for things that are somewhat unconventional, and that I have good reason for doing so. (For example, in my Advanced T-SQL course, I teach people how to write GROUP BY statements. Because most people do it wrong most of the time.)

So today I’m going to look at some of what’s going on with Scans and Seeks, and will demonstrate why the Seek operator is the one that has more to do.

I’m not going to suggest that all your execution plans will be better if all the Seeks are replaced by Scans of those same indexes. That’s simply not the case. But the advice that you always hear is a generalisation. Some Seeks are better than some Scans, and some Scans are better than some Seeks. But best of all of them is a particular Scan, and hopefully this post will go some way to convincing you of that, and demonstrate ways that you can help your queries take advantage of this technique.

From the user’s perspective, the big thing with Seeks is that the database engine can go straight to the required data for a particular query, whereas Scans search through the whole table for the data that’s needed. This is fairly true, and certainly, if it were the whole story, then it would be very hard to argue against Seeks. After all – if we can go straight to the required data, then that’s perfect! Hopefully you’re already thinking that it does sound too good to be true, and yet this is what we’re taught about Seeks.

An index uses a tree-structure to store its data in a searchable format, with a root node at the ‘top’. The data itself is stored in an ordered list of pages at the ‘leaf level’ of the tree, with copies of the ‘key data’ in levels above. The ‘key data’ is anything that’s defined in the index key, plus enough extra data to make sure that each row is uniquely identifiable (if the index is a ‘unique index’, then it already has enough information, if not, then the clustered index key(s) are included – with uniquifier column if the CIX keys are not unique), and therefore searchable. This means that the data can be found quite quickly, but it still requires some searching. It’s not like we have the file, pageid and slot number ahead of time. Then we really could go straight to the data we needed, which is what happens when we do a RID Lookup against a heap. We might find that this address stores nothing more than a forwarding record to another RID, but still we’re getting to the data very quickly. With an Index Seek, or even a Key Lookup, we need to find the data by searching for it through the levels of the tree.

I’ll also point out that a Seek takes two forms: Singleton and RangeScan, depending on whether the systems knows that we’re looking for at most one record, or whether we’re looking for multiple records. The singleton form is only used when the system already has sufficient data to identify a unique record. If there is any chance that a second record could match, then a RangeScan is performed instead. For the sake of the post, let’s consider the singleton form a special case of the RangeScan form, because they both dive in to the index the same way, it’s just that the singleton only dives down, rather than looking around once there.

So the Seek operation works out that it can use the index to find some rows that satisfy a predicate – some condition in an ON, WHERE or HAVING clause. It works out a predicate that indicates the start of the range, and then looks for that row. The database engine starts at the top of the tree, at the root node, and checks the index key entries there to find out which row to go to at the next level down, where it repeats the operation, eventually reaching the leaf level, where it can find the start of the range. It then traverses the leaf pages of the index, until it reaches the end of the range – a condition which must be checked against each row it finds along the way.

A Scan simply starts at the first page of the index and starts looking. Clearly, if only some of the rows are of interest, those rows might not be all clumped together (as they would be in an index on a useful key), but if they are, then a Seek would’ve been faster for the same operation, but there’s important part here:

Seeks are only faster when the index is not ideal.

Seeks are able to locate the data of interest in a less-than-perfect index more quickly than simply starting at the first page and traversing through.

But that search takes effort, both at the start, and on each record that must be checked in the RangeScan. I’m not just talking about any residual predicates that need to be applied – it needs to check each row to see if it’s found the end of the range. Granted, these checks are probably very quick, but it’s still work.

What’s more, a Seek hides information more than a Scan.

When you’re troubleshooting, and you look at a Scan operator, you can see what’s going on. You might not be able to see how many rows have actually considered (ie, filtered using the Predicate) before returning the handful that you’ve asked for (particularly if the scan doesn’t run to completion), but other than that, it’s pretty simple. A Seek still has this (residual) Predicate property, but also has a Seek Predicate that shows finds the extents of the RangeScans – and we have no idea how big they are. At least with a Scan we can look in sys.partitions to see how many rows are in there.

Wait – RangeScans? Plural?

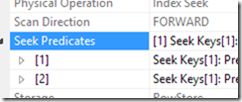

Yes. The execution plan does tell you that there are multiple RangeScans, if you look at the properties of the Seek operator. Obviously not in ‘Number of Executions’, or in ‘Actual’ anything. But in the Seek Predicates property. If you expand it. And count how many (at leas they’re numbered) entries there are. Each of these entries indicates another RangeScan. Each with its own cost.

And it’s not about the ‘Tipping Point’

I’m not going to talk about the fact that a Seek will turn into a Scan if the Seek is not selective enough, because that’s just not true. A Seek of a non-covering index, one that then requires lookups to get the rest of the required information will switch to using a covering index, even if that index is not ideal, if the number of lookups needed makes the ‘less ideal but covering’ index a less-costly option. This concept has nothing at all to do with Seeks and Scans. I can even make a Scan + Lookups turn into a Seek at a tipping point if you’re really keen… it’s entirely about the expense of Lookups.

So, Seeks have slightly more work to do, but this work is to make up for the indexes are typically ‘less-than-perfect’.

Whenever you need just a subset of an index, where that subset is defined by a predicate, then a Seek is going to be useful. But in a perfect world, many of our indexes can be pre-filtered to the rows of interest. That might be “active tasks” or “orders from today”, or whatever. If a query hits the database looking for this set of things, then a Scan is ideal, because we can choose to use an index which has already been filtered to the stuff we want.

So I don’t mind Scans. I don’t view them with the same level of suspicion as I do Seeks, and I often find myself looking for those common predicates that could be used in a filtered index, to potentially make indexes which are pre-filtered, and which are more likely to be scanned, because they have the 20 rows of interest (rather than seeking into a much larger index to get those 20 rows).

There’s more to this – I’ve delivered whole presentations on this topic, where I show how Scans can often make Top queries run quite nicely, and also how Seeks can tend to be called too frequently.

I don’t want you to start working to turn all your plans’ Seeks into Scans – but you should be aware that quite often, a Seek is only being done because your index strategy has space for improvement.

This Post Has 8 Comments

Spot on. One of the lesser-known tenants of the Big Data movement is the preference of scans over seeks for batch processing. I even heard a NoSQL vendor say that with the advent of FusionIO and such, there is no performance difference between seeks and scans or even sequential vs random IO. Not sure I’m ready to take that leap of faith yet tho.

You write:

"The ‘key data’ is anything that’s defined in the index key, plus enough extra data to make sure that each row is uniquely identifiable (if the index is a ‘unique index’, then it already has enough information, if not, then the clustered index key(s) are included – with uniquifier column if the CIX keys are not unique), and therefore searchable."

Are you sure about that? I think even a unique index contains the clustered keys. After all, how could you do a lookup to the clustered index without the clustered key?

In a unique index, the CIX keys are included at the leaf level. They’re not required on the other levels.

Hi Rob

What is about the index is very fragmented, for Seek it can get to any row easily no matter how fragmented the table is.

In that case fragmentation has no impact on performance.But if you do a scan (following the logical order) on a fragmented table, you can be jumping all over the to get the rows you need,instead of accessing them nice and neat and contiguously. What do you think?

Hi Uri,

I’m not suggesting that a Scan is better at locating a single row than a Seek. I’m suggesting a Scan across a filtered index is better than a RangeScan performed by a Seek, or frequently, than a large number of Seeks performed separately. Remember that a Seek performs a RangeScan which traverses the leaf nodes just like a Scan, but diving into a particular start point. If the index is filtered down to only the rows of interest, the Scan is less work than the RangeScan performed by a Seek – it doesn’t need to search for the start, or keep checking it’s in the range. It also reduces the impact of maintaining the index, as fewer rows need to be inserted / updated.

The jumping around that you can see because of fragmentation occurs just as much with RangeScans as with Scans. The perception that a seek is immune is wrong. A Seek simply scans a portion of the index. When people have low-selective predicates like "Price > 0" will cause a Seek which gives an illusion of improvement, but not much more.

Hi Rob,

Nice article and something that I fully endorse. Neither a seek nor a scan is inherently better. It all depends on the data and the required operation at hand. One thing I do disagree with is that you say that each row in a seek and range scan needs to be evaluated. While this can be true it isn’t necessarily true to my understanding. If the range is big enough SQL Server will evaluate the non-leaf level and decide that several pages in the index leaf meet the criteria. The first and last page require a check for every entry, however as I understand it SQL Server can just consume the intermediate pages (Between the first and last page) knowing that all records must match. I believe Paul Randal explained this in one of the MCM videos. My apologies to Paul and you if I’m incorrect. Can I get your thoughts on that?

Rows can be checked en masse using higher levels, but the point is that the engine needs to be considering whether each row is in the Range or not. In a range of 50k rows, I’m not saying that it’s 50k checks, just that it needs to know about each row.

Great post, and a great subject for exploring in the context of knee-jerk "optimisation" based on having just enough knowledge to be dangerous.