I know that everyone misses this, because I’m yet to find someone who doesn’t have a bit of an epiphany when I describe this.

When tuning Data Flows in SQL Server Integration Services, people see the Data Flow as moving from the Source to the Destination, passing through a number of transformations. What people don’t consider is the Source, getting the data out of a database.

Remember, the source of data for your Data Flow is not your Source Component. It’s wherever the data is, within your database, probably on a disk somewhere. You need to tune your query to optimise it for SSIS, and this is what most people fail to do.

I’m not suggesting that people don’t tune their queries – there’s plenty of information out there about making sure that your queries run as fast as possible. But for SSIS, it’s not about how fast your query runs. Let me say that again, but in bolder text:

The speed of an SSIS Source is not about how fast your query runs.

If your query is used in a Source component for SSIS, the thing that matters is how fast it starts returning data. In particular, those first 10,000 rows to populate that first buffer, ready to pass down the rest of the transformations on its way to the Destination.

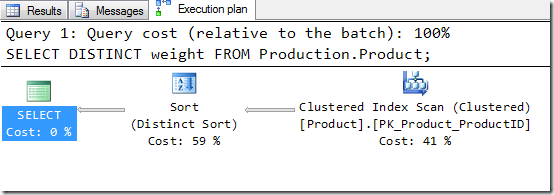

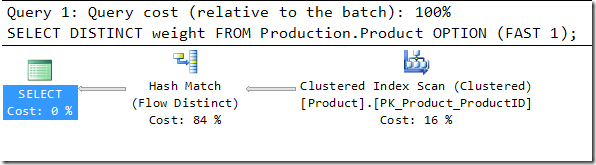

Let’s look at a very simple query as an example, using the AdventureWorks database:

We’re picking the different Weight values out of the Product table, and it’s doing this by scanning the table and doing a Sort. It’s a Distinct Sort, which means that the duplicates are discarded.

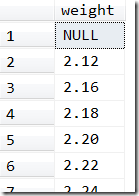

It’ll be no surprise to see that the data produced is sorted. Obvious, I know, but I’m making a comparison to what I’ll do later.

Before I explain the problem here, let me jump back into the SSIS world…

If you’ve investigated how to tune an SSIS flow, then you’ll know that some SSIS Data Flow Transformations are known to be Blocking, some are Partially Blocking, and some are simply Row transformations.

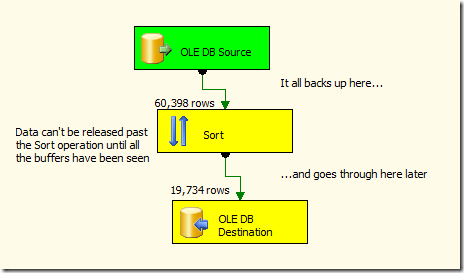

Take the SSIS Sort transformation, for example. I’m using a larger data set for this, because my small list of Weights won’t demonstrate it well enough.

Seven buffers of data came out of the source, but none of them could be pushed past the Sort operator, just in case the last buffer contained the data that would be sorted into the first buffer. This is a blocking operation.

Back in the land of T-SQL, we consider our Distinct Sort operator. It’s also blocking. It won’t let data through until it’s seen all of it.

If you weren’t okay with blocking operations in SSIS, why would you be happy with them in an execution plan?

The source of your data is not your OLE DB Source. Remember this. The source of your data is the NCIX/CIX/Heap from which it’s being pulled.

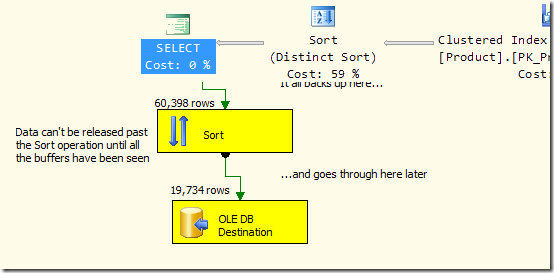

Picture it like this… the data flowing from the Clustered Index, through the Distinct Sort operator, into the SELECT operator, where a series of SSIS Buffers are populated, flowing (as they get full) down through the SSIS transformations.

Alright, I know that I’m taking some liberties here, because the two queries aren’t the same, but consider the visual.

The data is flowing from your disk and through your execution plan before it reaches SSIS, so you could easily find that a blocking operation in your plan is just as painful as a blocking operation in your SSIS Data Flow.

Luckily, T-SQL gives us a brilliant query hint to help avoid this.

OPTION (FAST 10000)

This hint means that it will choose a query which will optimise for the first 10,000 rows – the default SSIS buffer size. And the effect can be quite significant.

First let’s consider a simple example, then we’ll look at a larger one.

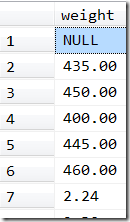

Consider our weights. We don’t have 10,000, so I’m going to use OPTION (FAST 1) instead.

You’ll notice that the query is more expensive, using a Flow Distinct operator instead of the Distinct Sort. This operator is consuming 84% of the query, instead of the 59% we saw from the Distinct Sort. But the first row could be returned quicker – a Flow Distinct operator is non-blocking.

The data here isn’t sorted, of course. It’s in the same order that it came out of the index, just with duplicates removed.

As soon as a Flow Distinct sees a value that it hasn’t come across before, it pushes it out to the operator on its left. It still has to maintain the list of what it’s seen so far, but by handling it one row at a time, it can push rows through quicker. Overall, it’s a lot more work than the Distinct Sort, but if the priority is the first few rows, then perhaps that’s exactly what we want.

The Query Optimizer seems to do this by optimising the query as if there were only one row coming through:

This 1 row estimation is caused by the Query Optimizer imagining the SELECT operation saying “Give me one row” first, and this message being passed all the way along. The request might not make it all the way back to the source, but in my simple example, it does.

I hope this simple example has helped you understand the significance of the blocking operator. Now I’m going to show you an example on a much larger data set.

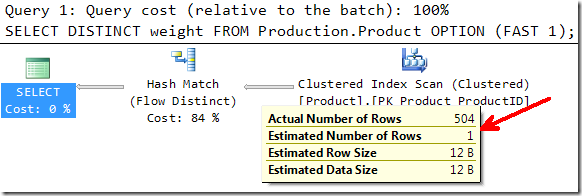

This data was fetching about 780,000 rows, and these are the Estimated Plans. The data needed to be Sorted, to support further SSIS operations that needed that.

First, without the hint.

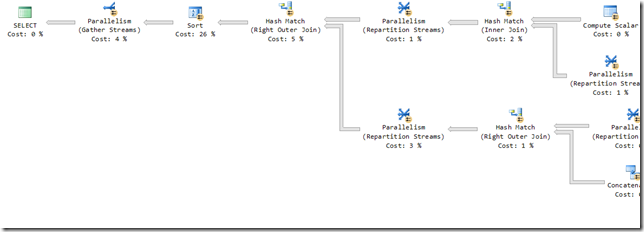

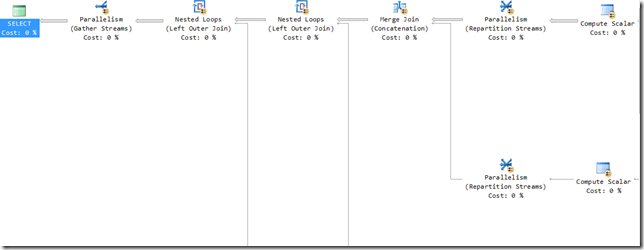

…and now with OPTION (FAST 10000):

A very different plan, I’m sure you’ll agree. In case you’re curious, those arrows in the top one are 780,000 rows in size. In the second, they’re estimated to be 10,000, although the Actual figures end up being 780,000.

The top one definitely runs faster. It finished several times faster than the second one. With the amount of data being considered, these numbers were in minutes. Look at the second one – it’s doing Nested Loops, across 780,000 rows! That’s not generally recommended at all. That’s “Go and make yourself a coffee” time. In this case, it was about six or seven minutes. The faster one finished in about a minute.

But in SSIS-land, things are different.

The particular data flow that was consuming this data was significant. It was being pumped into a Script Component to process each row based on previous rows, creating about a dozen different flows. The data flow would take roughly ten minutes to run – ten minutes from when the data first appeared.

The query that completes faster – chosen by the Query Optimizer with no hints, based on accurate statistics (rather than pretending the numbers are smaller) – would take a minute to start getting the data into SSIS, at which point the ten-minute flow would start, taking eleven minutes to complete.

The query that took longer – chosen by the Query Optimizer pretending it only wanted the first 10,000 rows – would take only ten seconds to fill the first buffer. Despite the fact that it might have taken the database another six or seven minutes to get the data out, SSIS didn’t care. Every time it wanted the next buffer of data, it was already available, and the whole process finished in about ten minutes and ten seconds.

When debugging SSIS, you run the package, and sit there waiting to see the Debug information start appearing. You look for the numbers on the data flow, and seeing operators going Yellow and Green. Without the hint, I’d sit there for a minute. With the hint, just ten seconds. You can imagine which one I preferred.

By adding this hint, it felt like a magic wand had been waved across the query, to make it run several times faster. It wasn’t the case at all – but it felt like it to SSIS.

This Post Has 51 Comments

Superb post Rob, and thank you for taking the time to write it up in such detail.

JT

I agree with Jamie, this is an excellent post. More technical stuff please, Rob 🙂

Paul

Thanks, Rob – awesome post!

Great post thanks Rob.

Time to review a couple of my existing source queries!!

Ah ha, thats what it was….

great post!

first time i seen the FAST option.

i’m not a SSIS heavy user, but this certainly has other uses

Excellent! This option also gives the developer more freedom to possibly do more transformations using TSQL, whereas when you have a huge data source, often the choice is to avoid any extra work on the SQL Engine. Thanks!

That’s really interesting! It’s another way of looking at thinking about what your customer/consumer actually needs, rather than just doing what the normal approach is.

Dant: Indeed – I used SSIS because it’s commonly used, but the same principle applies to any streaming of data.

Everyone: Thanks for your compliments! I just hope it makes people think… 🙂

That was a great technical example. Please keep them coming.

Thomas

Thanks Rob. Pure magic.

Hi Rob,

Awesome tip! And yes, I was one of the people that missed this.

:{>

Thanks a million Rob 🙂 Nice article

Cheers Rob, I never even knew this existed! Good stuff 🙂

Being a relational engine performance tuning consultant I just have to say that this type of activity can CRUSH a SQL server so be VERY careful with it’s usage unless you have COMPLETE control of everything that is (trying to) happening on the box!!

No worries everyone. 😉

Kevin: Agreed – especially if "this type of activity" is SSIS in general, which can be known to create cursors for inserts, or update a million rows one…at…a…time. And if they decide to turn on Transactions, then the locking can be just nasty.

I don’t want people to put the FAST 10000 hint on all their Source Queries. Instead, I want people to think about their execution plans as the first part of the data flow, and consider what kind of optimising can be done on that – remembering that it’s not always best to pick the query that will finish first.

You’ll know as well as anyone that sometimes the fastest queries can be more expensive on the system because of locks, parallelism, tempdb usage, and other factors – and that choosing a fast plan is not always as ideal as choosing a plan which is less CPU intensive, or less IO intensive, etc. We’ve all seen cases where we hurt an individual query (eg, MAXDOP 1-ing it) for the sake of concurrency.

Your point is excellent – thank you for making it. Far too often, people tune individual situations without giving thought to the rest of the story. Just today I was talking to a fellow MVP about the performance impact of FKs. They do have a negative impact on the performance of inserts, updates and deletes, but under consideration of the benefits, we generally choose to keep them. I hope people consider the FAST 10000 as a useful tool, but not see every query as the proverbial nail.

Great article!

I knew optimising your sources was important in SSIS, but I had never thought about the FAST option. Great tip!

I’ve been out of commission for several weeks… Finally read your blog post today…

EXCELLENT stuff, Rob! Keep ’em coming!

–Brad

Great post! As someone still learning the ropes of SSIS this was great for me to learn and think about. Tried it out briefly on a data flow that was pulling 6.7 million rows and I don’t know if it was my imagination but it "seemed" to go faster when watching it pass rows inside BIDS. I’ll have to try it out more later, thanks again for great post and keep up the good work!

Thanks guys.

Jorge – make sure you look at the execution plan of your query and investigate if it’s helping, and consider the suitability of the hint.

If you have a blocking transformation in your SSIS flow, the data won’t be released past it until all the data has reached it. This means that FAST won’t help the speed of the SSIS package, only how fast buffers start appearing. In fact, it’s quite likely to make it slower.

It’s all about managing the bottlenecks, and this post is giving a suggestion to those people who haven’t considered that the flow really starts in the execution plan.

I’ll try to put a few more posts together about blocking operations, and the similarities between tuning SSIS Data Flows and tuning queries.

Very nice post Rob! As you’ve pointed out, the FAST option certainly has its use in specific situations.

Looking forward to your upcoming posts 🙂

Best regards, Valentino.

Hi Rob, Could you please put some documents regading how to speed up the import from oracle to Sql server.

Thanks,

Mona

Rob,

this is a great post!

Do you have any idea about how to do this if you have a CTE as a query for your data source?

Feodor

Hi Rob,

Great article. I’ve managed to cut our data warehouse load time by 50% thanks to your tips.

Cheers

Feodor – Using a CTE in a query makes little difference to the way it runs, it’s pretty much the same as using a view.

David – Great news! That’s the kind of comment I love!

great post

Brilliant,

A STG package that took 08:40m now runs in 21 seconds.

Simply by adding this 1 line to the end of the query.

That is about 25 times faster.

Thanks man.

Sorry, 08:40:00 against 00:00:21.

That is about 1500x rather than 25x

(before we had a Buffers Spooled = 1 problem)

Karel, I’m pleased it helped!

Thanks for keeping this posted. You have changed my life. My pkg wrote 183,000 records in about 12 minutes vs. the same 183,000 in less than 2 seconds!! Now I will no longer have time to get coffee anymore. ;o)

This is a great IT example of how bottlenecks are explained in the excellent business book "The Goal". IMHO, it should be read by anyone doing data movement!

So useful topic.superb explanation.Thanks!

Awesome tip. I have a related question. First I want to share some data

My Performance Analysis:

Table config:

# of rows in source query – 945,000

size: 556 MB

HINT | DefaultBufferMaxRows | Time

OPTION (FAST 100) | 10,000 | 1:16

OPTION (FAST 100) | 100,000 | 1:16

OPTION (FAST 1000)| 100,000 | 1:54

OPTION (FAST 1000)| 500,000 | 1:54

OPTION (FAST 100) | 500,000 | 1:16

OPTION (FAST 1) | 500,000 | 1:16

OPTION (FAST 1) | 50,000 | 1:33

OPTION (FAST 100) | 50,000 | 1:33

I am trying to make sense out of this data. I understand the OPTION (FAST XX) but not clear how buffer is being used and why it makes a difference.

So my question is:

Is there a way (if yes then how) to find out (other than just experimenting) that what are the optimal values for:

OPTION FAST

DefaultBufferMaxRows, DefaultBufferSize

Rows per batch, Maximum insert commit size

Another thing to add to above question…

How do you know when to use modulo approach to divide the data source so they can be processed in parallel.

When I added the modulo to best scenario above and divided the data into 3 groups I gained some more performance

OPTION (FAST 100, MAXDOP 1) | 10,000 | 1:03 (key % 3 = 0, key % 3 = 1, key % 3 = 2)

Hi Sam,

I find that setting FAST to be the same as the buffer size can help, as you’re telling it to fill up that first buffer as quickly as possible, but sometimes smaller FAST values can help encourage Nested Loops, which can be faster in some situations.

But the details about how large the buffer size should be are blurry and dependent on a lot of factors.

In your second comment, you mention parallelism, but also use MAXDOP 1. It’s definitely worth working out ways that you can encourage parallelism in your main query as well as in SSIS. As far as "how do you know when…?" is concerned, it’s really down to what you are seeing as the bottleneck.

Ultimately, it’s good that you’ve tried a few options to see what works for you.

Rob

Thanks Rob for a quick response. This is a great post. Good Job!

How is the DISTINCT clause in your query going to prevent duplicates in the destination data source if you are pulling and inserting the first 10000 rows already?

Hi JQ – I’m not sure I fully understand your question. The point of the post is to explore the impact of removing blocking operators from the query acting as the source. I used DISTINCT to produce a quick example of a blocking operator. There are various ways to prevent duplicates in your destination, but that’s more about how you handle your inserts than how you pull the data out of the source, as the source would have no idea what’s already in your destination.

after all my source has 36 million records but in one batch it fetches only 2,00,000 records only, how can i improve data retrieval rate if we have to join 9 to 10 tables in source query.

36M rows is quite a bit, but 18 batches isn’t many. Hopefully there aren’t too many things like Sorts in there. I would suggest you explore general tuning, as the difference between performance for pulling 2M compared to 36M probably isn’t going to produce a very different plan.

I suppose FIRST_ROWS hint is the Oracle equivalent MSSQL OPTION FAST, but has anyone tested it? If so, I’d be interested to see the results. (Too busy to test it myself at this time, sorry.)

WOW

Rob, this reduced the duration of one of the loads here by 70%!! Realize this is a 5 yr old post but your still helping out with this. Thank you.

Hi Rob,

Will this still apply in SSIS 2016 with the Data Flow Buffer Auto Sizing capability ?

Thanks

Rich

Hi Richard

Good question,

DId you get an answer?

Thanks

Ben & Richard,

That setting makes no difference to the way the query for the data source runs. What that setting does is to set the buffer size entirely according to the DefaultBufferMaxRows setting, rather than restricting it to DefaultBufferSize. It’s essentially like giving DefaultBufferSize an “unlimited” value.

Absolutely fantastic and quite evident…

This is a great tip for optimization.

I was using this in SQL and not in SSIS.

Riddle me this, cause i like what your saying here….

Whats the secret to loading CLOBS from an Oracle datasource to a SQL Server destination?

cause this Hint has no effect…

Didn’t know I could apply this in SSIS,

I’m getting better speeds in my data transfer now. So helpful.

Thank you .

Pingback: What were you thinking, Rob? - LobsterPot Blogs