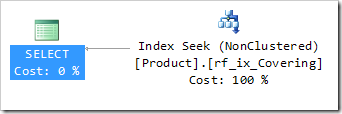

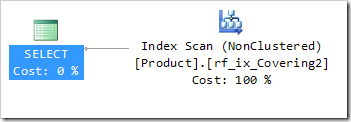

Take a look at this query plan.

Yes, that arrow indicates a single row. This is an Index Seek, returning a single row. And yet it’s rubbish. That’s right – it’s rubbish!

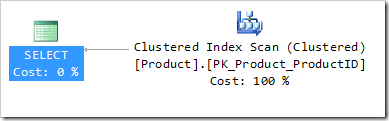

In fact, I had to provide a hint for it to use this index. A table scan would’ve been better, and this is what happens without the index hint.

Let’s look at the query. I can promise you there’s no bug here.

|

1 2 3 4 5 |

SELECT Name, ProductNumber FROM Production.Product WHERE DaysToManufacture < 4 AND ReorderPoint < 100 AND Color = 'Red'; |

And the covering index is defined as follows:

|

1 2 3 |

CREATE INDEX rf_ix_Covering ON Production.Product(DaysToManufacture) INCLUDE (Name, ProductNumber, Size, ReorderPoint, Color) WITH (FILLFACTOR=30); |

Try it yourself on AdventureWorks, you should see the same.

Of course, there’s a bit of trickery going on. For example, I purposely spaced out the NCIX using FILLFACTOR=30. But despite that, most DBAs would tell you that the first plan is better than the second. But they’d be wrong.

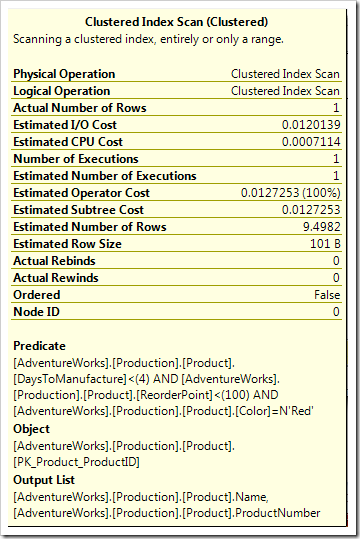

Let’s look at the tooltip of each of those Index operations. First, the Clustered Index Scan.

There’s no real surprise here. We know there’s only one row being returned, I told you that before. There’s a predicate listed which is tested on every row, and the Name and ProductNumber are the two fields which are being outputted. Estimated Subtree Cost of 0.0127253.

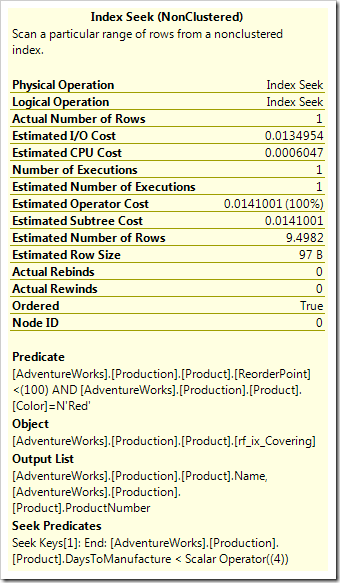

How about that Seek then, which the Query Optimizer only chose when its hand was forced by a hint?

You’ll probably notice immediately that the Estimated Subtree Cost is higher! 0.0141 instead of 0.0127. The reason why is in the Predicate and Seek Predicate sections. Sure, it can do a Seek – it can seek on the first key column of the index, on DaysToManufacture. But having found those records, the data is still very much in DaysToManufacture order. We can’t search on those predicates very easily at all, and we’re left with them being handled as a Residual Predicate.

To use a phone-book example, this is like searching for people called “Rob F.” – I can easily find people whose last name starts with F, but would then have to go through all of them looking for people called Rob. That residual check could be painful. Actually, this particular example is more like saying “Find me anyone called Rob whose surname is < Z”, as the Seek Predicate involved returns most of the table.

Unfortunately, the query plan doesn’t show us how many rows go through the Residual Predicate check. If it did, we’d be able to see that it’s over 400 rows (in a table of 504 rows), and we might rethink our indexing strategy.

In fact, I can easily make an index which causes the same query to perform a full Index Scan, plus Lookup, and which is half the cost of either of the plans we’ve seen so far.

|

1 2 3 4 |

CREATE INDEX rf_ix_NotCovering ON Production.Product(DaysToManufacture) WHERE DaysToManufacture < 4 AND ReorderPoint < 100 AND Color = 'Red'; |

Of course, now I’m really cheating, by using a Filtered Index which would only contain a single row. And I could’ve eliminated the Lookup by including the other columns, halving the cost yet again.

|

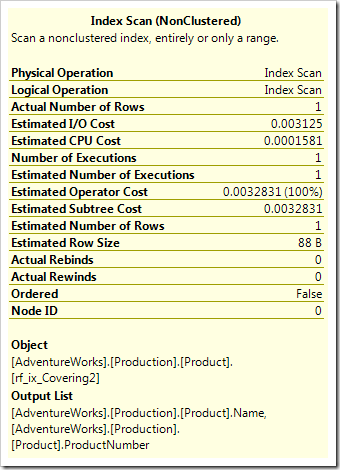

1 2 3 4 5 |

CREATE INDEX rf_ix_Covering2 ON Production.Product(DaysToManufacture) INCLUDE (Name, ProductNumber) WHERE DaysToManufacture < 4 AND ReorderPoint < 100 AND Color = 'Red'; |

Interestingly, the tooltips for both of these Filtered Index operations don’t show the predicates – the Query Optimizer knows that the index has already done the necessary filtering, and in this case, it doesn’t need to apply any further predicates, whether Seek or Residual.

So… just because an index is covering a query, doesn’t mean it’s necessarily the right choice. The better question is “Is this index good for this query?”

(Incidentally, this is the kind of thing that I’d go through in my pre-conference seminar at SQLPASS if chosen, and in the talk on “Joins, SARGability and the Evils of Residualiciousness” if that one gets picked)

This Post Has 22 Comments

Very interesting!

It caught me out dam it 🙂 It would seem then that covering is not always king.

The problem with examples is that they are often not very representative of real world. I doubt very much if I’d even glance at a singleton select, seek, scan or table scan. It’s interesting I agree, it would make a good interview question but in the overall scheme of performance tuning of multiple table, multiple query procs a difference of .0014 is unlikely to make much difference. The only point i’d perhaps make, is that a clustered seek/scan returns the entire row wheras the covered idnex will only return the rows in the index – on a wide table this might or might not be significant – it might show in the cost of course.

Colin,

But that single-row returning Seek is hiding the truth. It’s closer to a scan than a seek really, because the Seek Predicate is so non-selective. It’s pulling back over 400 rows, applying a residual predicate to work out what’s left. Interestingly, a non-covering index may have shown this more obviously.

I’m all for Covering Indexes that return a single row, but it’s important to recognise whether that row is being found by the Seek Predicate or the Residual Predicate.

Oh yes I do agree and you’re right – I have example I use in presentations which show as a clustered index seek but actually perform a full scan – but it only becomes obvious if you check the number of page reads. And yes you’re right most would say a seek is better than scan, but if you saw either of these within a query plan involving a number of queries you’d probably not give them a second glance. ( compared to more complex queries ) I enjoy these types of posts so much because it makes you think about the basics, which is very important, so thankyou.

🙂 You’re welcome, Colin.

Good point … but I see it as obvious based on the number of rows in the table. If the same test is done on a different table with millions of records then the result would be different.

Hi Ranjith – which test do you mean? The principles I’ve shown here will apply no matter how big the table.

1) have you updated ALL stats on that table with FULL SCAN?

2) IIRC, AdventureWorks uses unicode character storage. Try your query like this:

SELECT Name, ProductNumber

FROM Production.Product

WHERE DaysToManufacture < 4

AND ReorderPoint < 100

AND Color = N’Red’;

3) What are the estimated rowcounts for each test you did and how do they compare to actual rowcounts?

Results from above?

Hi Kevin,

The estimated rowcounts are just fine. The issue is the ability to seek on more than the first predicate, which is not very selective – plus the fact that I’ve padded the index to make it larger than the CIX. I’m trying to demonstrate that just because a Seek operation returns a single row, that doesn’t mean that it’s efficient at all. It’s like there are two components to a Seek – the Seek part and the Residual part. If the Residual is doing most of the work, the Seek is not much different to a Scan.

Rob

Rob,

Really nice post.

Got your point.

One doubt, Among the three predicates, if we have put the more selective column first in the index, the QO might have opted index seek. right?

I bumped up the fill factor for the orignal rf_ix_Covering to 100 and got an Estimated Subtree Cost of 0.0669. What are the fill factors of the other indices that you’ve used as examples?

Roshan & Andrew,

Yes, if the index were more selective, or packed more tightly, then it could well have been chosen, and rightly so. I was contriving an example to show that indexes that look appealing with Seeks and low number of rows returned could well be hiding a hidden cost, and that if the Seek Predicate is not particularly selective, then it could be little better than an Index Scan – as demonstrated by the fillfactor impact. I picked 30% thinking that despite being non-clustered (and therefore fewer columns at leaf level), this could make the range satisfied by the Seek Predicate require more reads than performing a CIX scan.

So yes – it’s slightly contrived, but to demonstrate an important point about the cost of non-selective Seek Predicates, even on Covering Index Seeks that output a single row. Many people see an Index Seek with low output and no lookups as the goal of performance tuning, but it’s simply not the case.

Oh, and Andrew – the other fillfactors were 100%, but as mentioned in the post, the filter index had only one row, so fillfactor isn’t going to be particularly relevant. I did admit to "a bit of trickery", after all (but only to demonstrate a valid point).

What if the index matches all WHERE conditions?

CREATE INDEX rf_ix_Covering ON Production.Product (DaysToManufacture, ReorderPoint, Color)

INCLUDE (Name, ProductNumber, Size)

WITH (FILLFACTOR=30)

or this with equality column first?

CREATE INDEX rf_ix_Covering ON Production.Product(Color, DaysToManufacture, ReorderPoint)

INCLUDE (Name, ProductNumber, Size)

WITH (FILLFACTOR=30)

The index above isn’t covering in the sense that I understand because filter/key columns don’t match the query. You are filtering unsorted INCLUDE columns

Hi gbn,

Yes, there are benefits to having the equality predicate’s field as the leading key. But that’s pretty much it. Even though the covering index looks good according to the plan, the fact that the Seek Predicate is so non-selective is a problem. I know there are better indexes available (the best being a filtered index), but I’m showing that just because you have a Seek on a Covering Index, you don’t necessarily have an effective index.

Rob

The optimiser has just found an index that matches roughly and decided to use it.

It isn’t really covering for this query, more "convenient".

So in that sense, yes, the seek is useless because it is a scan.

But you admit that you had to provide an index hint to get the optimiser to use this misleading index, so what exactly are we proving here? Yes, there are (artificcial) situations where a single-row seek can require more effort than is apparent at first glance, but since the optimiser doesn’t actually choose such options unless you make it I’m not sure how surprising the information is here?

Hi Phil,

There are plenty of situations where people try to force indexes to be used because they want a Seek that returns a single row, because they assume that it’s an ideal operation. That’s what I’m trying to challenge.

Rob

Hi Rob,

I have a table, having non cluster index say(id,date), but when I am running a query

select id,date from table where id = 1 and date = ‘2010-09-09’

its always performing index scan, while returning only one row..

so why this is happening, when my index is selective only one row itis returning, then why scan also it involvs 98 logical redas..

also this index is not unique, both id and date have duplicate values, but this combination of date and id has only single row.

Can you send me an email with the execution plan in it? I’m sure there must be something else going on, as I see little reason in what you’ve written for it to scan rather than seek. You can reach me at hotmail.com, as rob_farley.

That NC index is a pretty weak index though. The index itself (on DaysToManufacture) isn’t selective in the first place, regardless of whether or not the included columns make it cover the query.

I suppose the main issue is how well the QO discerns what’s going on.

The NC index is designed to be weak – that’s why I had to use a hint to get it to be used in the first case. But the point about having an ineffective index holds. People frequently kill the impact of a composite index by using an inequality, or apply a function so the index key can’t be used at all – and if there’s a leading key which can be used, an Index Seek which produces a small number of rows is seen. It looks good, but isn’t.

But the impact of this can be seen using trace flag 9130, or now with later service packs on 2012. http://sqlblog.com/blogs/rob_farley/archive/2015/12/12/a-new-superpower-for-sql-query-tuners-number-of-rows-read.aspx