For the blog post that I’ll publish tomorrow, I wrote a query that I thought needed a blog post all of its own. This is that post.

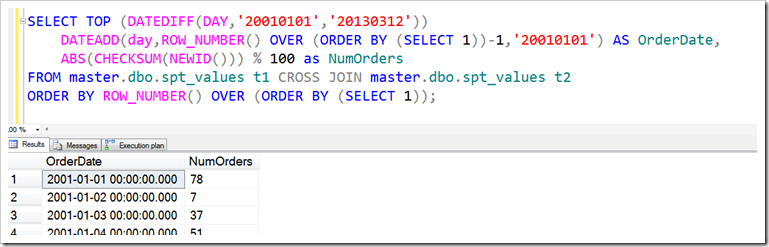

The query was this one. Its results aren’t that interesting: it’s just a list of dates, each with a random number between 0 and 99. Just some sample data that I thought I’d use.

```sql
SELECT TOP (DATEDIFF(DAY,'20010101','20130312'))
    DATEADD(day,ROW_NUMBER() OVER (ORDER BY (SELECT 1))-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
ORDER BY ROW_NUMBER() OVER (ORDER BY (SELECT 1));
```

So, let me give some background…

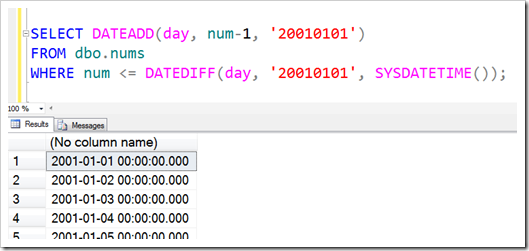

When teaching about T-SQL, I often point out that a nums table is tremendously useful. One of its uses is to make a dates table, which can be really handy in a bunch of other ways, like in data warehouses. The idea is simple, assuming you have a table of numbers which starts from either 0 or 1 (I don’t really care, although I start mine from 1). Here I figure that you want to get dates from 20010101 up to (but not including) today.

```sql
SELECT DATEADD(day, num-1, '20010101')
FROM dbo.nums
WHERE num <= DATEDIFF(day, '20010101', SYSDATETIME());
```

I’m not going to show you the results, I figure that it’s easy for you to picture a list of dates.

Oh, alright then.

Anyway, moving on.

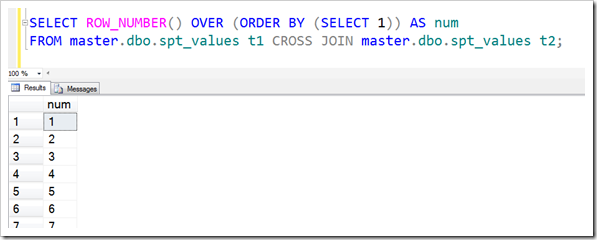
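For reference, a physical nums table like the one assumed above could be built along these lines. This is only a sketch: the post refers to dbo.nums with a num column, but the data type, the primary key and the 100,000-row size are my assumptions.

```sql
-- Assumption: dbo.nums does not exist yet; type, key and row count are illustrative.
CREATE TABLE dbo.nums (num int NOT NULL PRIMARY KEY);

-- Fill it with 1..100000, borrowing rows from a cross join of spt_values.
INSERT dbo.nums (num)
SELECT TOP (100000) ROW_NUMBER() OVER (ORDER BY (SELECT 1))
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2;
```

100,000 numbers covers well over two centuries of daily dates, which is plenty for a date list starting in 2001.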

In this case, I didn’t have a nums table handy, and for that, I tend to use ROW_NUMBER() and the table master.dbo.spt_values. This table contains a bunch of rows of handy reference data. I wasn’t interested in the contents though; I just wanted to have enough rows, and it’s quicker to reference that than to write a bunch of self-joining CTEs. There are over 2000 rows (2515 to be exact) in master.dbo.spt_values, and if I want millions (2515 × 2515 is a little over 6 million), I just cross join it to itself.

```sql
SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2;
```

This query gives me a lot of rows, of course… but if I use it in a sub-query (or CTE) and filter it, then the simplification work that the Query Optimizer does means it doesn’t try to work out every one of those millions of rows for me; it’ll stop when it’s seen enough.

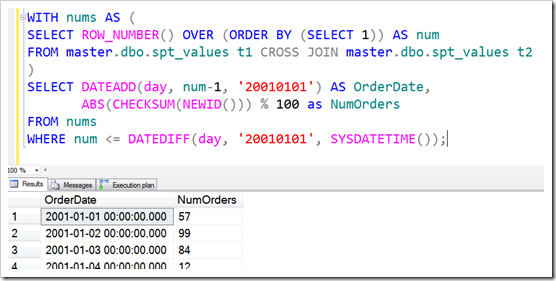

As an example, I can use this in conjunction with my earlier query, which had a WHERE clause.

```sql
WITH nums AS (
    SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
    FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
)
SELECT DATEADD(day, num-1, '20010101')
FROM nums
WHERE num <= DATEDIFF(day, '20010101', SYSDATETIME());
```

This produces my rows in less than a second, giving the same results as when we had a physical nums table.

To include my random values, I’m using the commonly found randomizing method of ABS(CHECKSUM(NEWID())) % 100. RAND() is no good, it just produces the same value for every row. NEWID() is much better, but it’s not a number. CHECKSUM solves that, but can be negative. ABS will wrap that up nicely and give a random number in a large range. Mod 100 solves that.

```sql
WITH nums AS (
    SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
    FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
)
SELECT DATEADD(day, num-1, '20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM nums
WHERE num <= DATEDIFF(day, '20010101', SYSDATETIME());
```

This works nicely, and is a common pattern for dealing with this kind of thing.
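To see why RAND() falls short for this, a small side-by-side can help. The sketch below is illustrative only; the column aliases are mine. It also shows a slightly more defensive variant of the expression: CHECKSUM returns an int, and in the (very unlikely) case that it returns -2147483648, ABS raises an overflow error, so taking the modulo before ABS avoids that edge case.

```sql
SELECT TOP (5)
    RAND() AS same_every_row,                     -- unseeded RAND() is evaluated once per query
    ABS(CHECKSUM(NEWID())) % 100 AS per_row,      -- NEWID() is evaluated for each row
    ABS(CHECKSUM(NEWID()) % 100) AS per_row_safe  -- modulo first, so ABS never sees -2147483648
FROM master.dbo.spt_values;
```

Both per-row expressions produce values from 0 to 99; they differ only in how they treat that one extreme CHECKSUM value.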

But it’s not the query at the top of this post. That was done without a CTE, and used TOP instead, ordering by the ROW_NUMBER().

Let’s have a look at it.

```sql
SELECT TOP (DATEDIFF(DAY,'20010101','20130312'))
    DATEADD(day,ROW_NUMBER() OVER (ORDER BY (SELECT 1))-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
ORDER BY ROW_NUMBER() OVER (ORDER BY (SELECT 1));
```

We can see that the FROM clause is the same as in our nums CTE. And the same randomising bit for NumOrders is in there.

But instead of using ROW_NUMBER() OVER (ORDER BY (SELECT 1)) to define a num column, we’re using it within another function? Can we do that? Yes. ROW_NUMBER() can only be used in the SELECT clause and in the ORDER BY clause, and there are restrictions about using it within aggregate functions as you might expect, but here we’re just using it as a value which changes with every row, and there’s really no problem at all.

We don’t have a filter though. In our CTE version, we used a filter to make sure we weren’t using every row from our CROSS JOIN. Here, we’re using TOP instead. But not TOP with some number – TOP with a function in it! This has been possible for a while, and it even supports sub-queries that produce numbers, in the form (including the double-bracket): SELECT TOP ((SELECT SomeVal … )) Col1, Col2…
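As a quick illustration of what TOP will accept, here is a sketch using a variable, a function call and a sub-query. The variable name and the choice of the number column from spt_values and of sys.databases are mine, picked only because they exist on any instance.

```sql
DECLARE @n int = 3;

-- TOP with a variable
SELECT TOP (@n) number
FROM master.dbo.spt_values
ORDER BY number;

-- TOP with a function call (the DATEDIFF here evaluates to 11)
SELECT TOP (DATEDIFF(DAY, '20130301', '20130312')) number
FROM master.dbo.spt_values
ORDER BY number;

-- TOP with a scalar sub-query; note the double brackets
SELECT TOP ((SELECT COUNT(*) FROM sys.databases)) number
FROM master.dbo.spt_values
ORDER BY number;
```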

TOP appears in this query because I needed to limit the number of rows coming back. I couldn’t use the WHERE clause, because I didn’t have anything to filter on. So I used TOP, and had to use a function in there.

So let’s compare the plans.

To be fair, I’ll use the fixed date in both queries (for now).

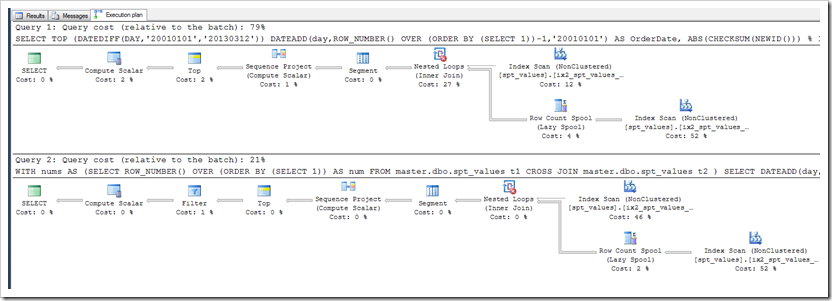

Oh, how disappointing! My TOP query is 79% of the batch, and the CTE version is 21%. Clearly my new one is 4 times as bad, and I should give up on it.

Actually, if you run these two queries on your own machine, you’ll see the first one isn’t 4 times as bad at all. It’s actually FASTER than the second. Something’s going on, and we should find out what.
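If you want to check this yourself, one way (a sketch, not necessarily how I measured it) is to run both fixed-date versions with SET STATISTICS TIME ON and compare the elapsed times reported in the Messages tab.

```sql
SET STATISTICS TIME ON;

-- TOP version, with the fixed end date
SELECT TOP (DATEDIFF(DAY,'20010101','20130312'))
    DATEADD(day,ROW_NUMBER() OVER (ORDER BY (SELECT 1))-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 AS NumOrders
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
ORDER BY ROW_NUMBER() OVER (ORDER BY (SELECT 1));

-- CTE version, with the same fixed end date
WITH nums AS (
    SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
    FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
)
SELECT DATEADD(day, num-1, '20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 AS NumOrders
FROM nums
WHERE num <= DATEDIFF(DAY,'20010101','20130312');

SET STATISTICS TIME OFF;
```

With only a few thousand rows the timings will be small and noisy, so it is worth running the batch several times before drawing conclusions.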

The plans look remarkably similar. In fact, the second one is identical, but has an extra Filter operator. I don’t have a Filter in the TOP one, but I didn’t really expect it to make that much difference.

Otherwise, the plans look pretty similar. They both use a Row Count Spool, have a Sequence Project to work out the ROW_NUMBER, and they both use a Top operator – even the second one which doesn’t use TOP.

But you see, the Query Optimizer would have seen that I was filtering on a column that mapped to ROW_NUMBER(), and that I was doing a “less than” operation there. That’s like doing a TOP, and the Query Optimizer sees benefit in this. It doesn’t explain the “4 times worse” thing though.

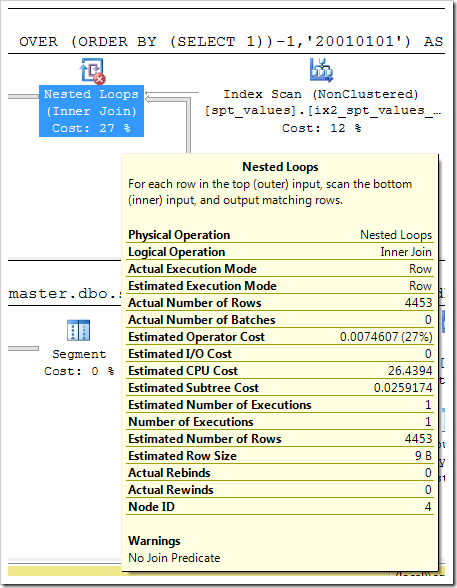

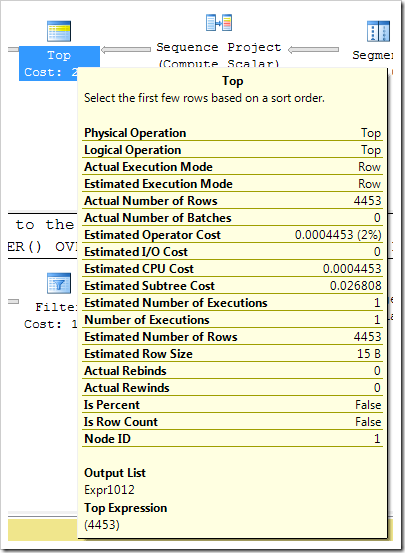

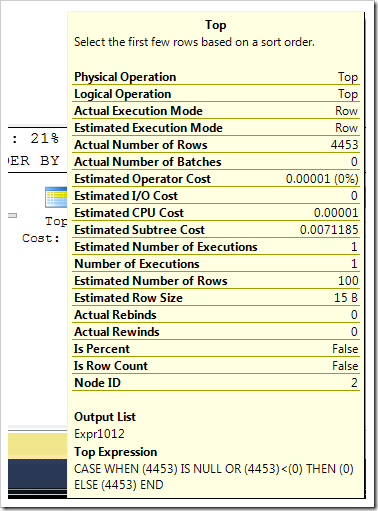

Let’s examine some of the numbers. Interestingly, we see that the Nested Loop operator expects to do almost no work in the second plan, and 27% in the first. There’s a warning on there – that’s just because I’m using a CROSS JOIN, and I’m okay with that.

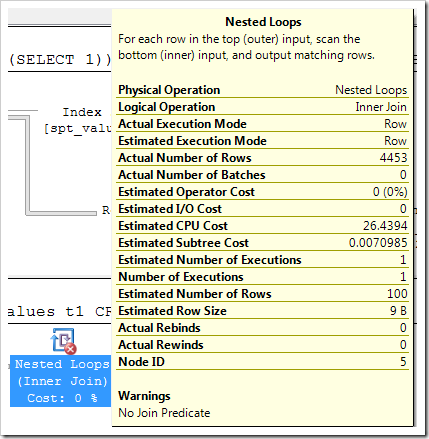

The word ‘expect’ was very important in that last paragraph. The percentages there are based on the work that is expected to be done. Let’s look at the properties of the two Nested Loop operators.

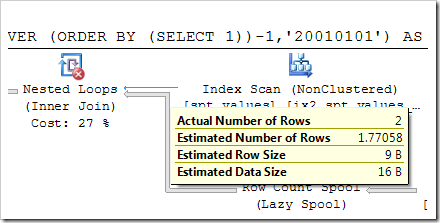

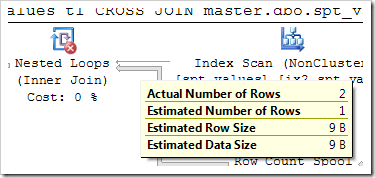

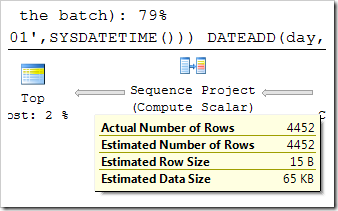

Glancing down here, we see a lot of it is the same, but the Estimated Number of Rows in the first one is 4453 (which is correct), while the second one is only 100 (not quite so correct). The arrows on the upper side of the Nested Loops show the effect of this.

The second one figures it’ll have seen all the rows it needs to see before it gets a second row from the first table, whereas the first one thinks it might need 1.77058 rows (being 4453/2515). No wonder the second query thinks it’s quicker.
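The numbers in that paragraph are easy to reproduce. This small sketch just redoes the arithmetic; the column aliases are mine, and 2515 is the spt_values row count quoted earlier in the post, which may differ on your instance.

```sql
SELECT
    DATEDIFF(DAY, '20010101', '20130312') AS rows_requested,  -- 4453 days between the two dates
    4453.0 / 2515 AS outer_rows_needed;                       -- roughly 1.77058 rows from the outer input
```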

Let’s see where this 100 estimate comes from though. Perhaps it’s that filter?

Based on the estimates of the rows going into the filter and coming out of it, it expects that the filter will see 100 rows and return just 30. This is bad too, but it’s not as bad as the 100 v 4453 impact.

This thing actually comes from the way that the Query Optimizer has turned the WHERE clause into a TOP clause. It figures there’s some doubt there, and guesses that 100 rows is probably not a bad place to start. When we give an explicit value (even using the DATEDIFF function), it can figure out what’s there and use this value. The second query goes part way there and works out that the result of the DATEDIFF is 4453, but simply doesn’t apply it fully to the Top operator, leaving us with that guess.

It’d be nice if it could tell that 4453 is never NULL, and simplify this out a bit more, but it simply doesn’t do this.

To round the post off, let’s consider what happens if we’re using SYSDATETIME() instead of the constant.

```sql
SELECT TOP (DATEDIFF(DAY,'20010101',SYSDATETIME()))
    DATEADD(day,ROW_NUMBER() OVER (ORDER BY (SELECT 1))-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
ORDER BY ROW_NUMBER() OVER (ORDER BY (SELECT 1));

WITH nums AS
(SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
 FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
)
SELECT DATEADD(day,num-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM nums
WHERE num <= DATEDIFF(DAY,'20010101',SYSDATETIME());
```

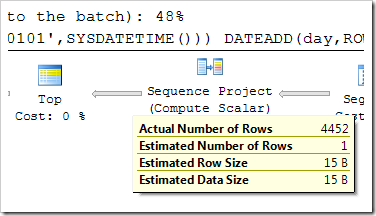

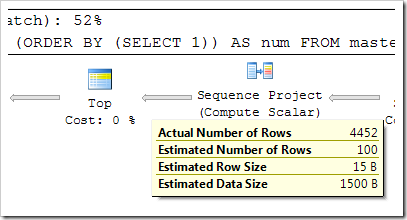

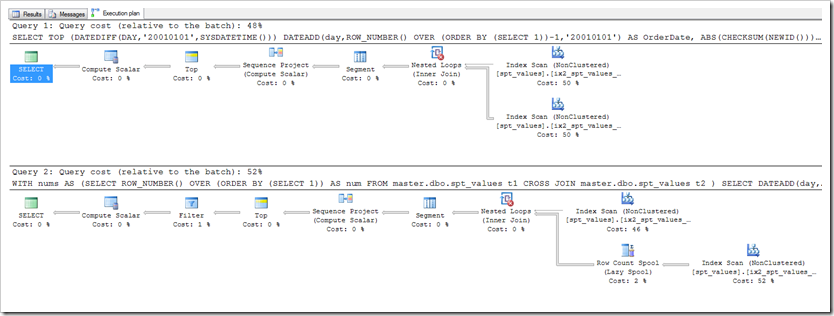

Oh! Now the first one is simpler still, leaving out the Row Count Spool operator, and thinking it’s going to be cheaper than the second one. Having not trusted that figure before, does this mean the first one is actually worse? Well, we have an idea about where to look – the estimates on some of the arrows, particularly near the Top operator.

Oh no! Our first query thinks there’s now only one row going to be coming through. How awful! (Oh, and the number 4452 is fine, because I’m actually running this on March 11th, not March 12th, it’s just that March 12th is T-SQL Tuesday this month, which is what I was writing the query for).

If you run this on your machine, hopefully you’ll see something different. Really.

You see, this problem has kinda been fixed, and if you enable the documented trace flag 4199, it should be better – for the first query at least.

By turning on trace flag 4199, and telling it to ignore what’s in the cache, it will evaluate SYSDATETIME() for that particular execution, and therefore come up with the right value for the Top operator. It doesn’t fix the WHERE clause version, but it does solve the TOP clause version.

```sql
SELECT TOP (DATEDIFF(DAY,'20010101',SYSDATETIME()))
    DATEADD(day,ROW_NUMBER() OVER (ORDER BY (SELECT 1))-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
ORDER BY ROW_NUMBER() OVER (ORDER BY (SELECT 1))
OPTION (QUERYTRACEON 4199, RECOMPILE);

WITH nums AS
(SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 1)) AS num
 FROM master.dbo.spt_values t1 CROSS JOIN master.dbo.spt_values t2
)
SELECT DATEADD(day,num-1,'20010101') AS OrderDate,
    ABS(CHECKSUM(NEWID())) % 100 as NumOrders
FROM nums
WHERE num <= DATEDIFF(DAY,'20010101',SYSDATETIME())
OPTION (QUERYTRACEON 4199, RECOMPILE);
```

The reason why I say this shouldn’t’ve happened on your system is because you’re probably using trace flag 4199 as a start-up parameter.
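If you are not sure whether 4199 is already on for your instance, DBCC TRACESTATUS will tell you. This is just a quick check, not part of the original test:

```sql
-- Reports whether trace flag 4199 is enabled; the -1 asks about the global setting.
DBCC TRACESTATUS (4199, -1);
```

A row showing Status = 1 and Global = 1 suggests the flag is enabled server-wide, for example through the -T4199 start-up parameter.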

So there you have it… a query which might seem strange at first glance, but is actually a really nice alternative. Don’t be afraid to use expressions in your TOP clause – it’s a very powerful mechanism, and TOP is a great operator to see in your plans (because it will ask for fewer rows from your Scans and Seeks – remember how execution plans suck?). As is often the case, we’re tripped up by estimates being out, but if you can see what’s actually going on, you should be able to make good decisions about how to form your queries.

This Post Has 6 Comments

So what’s the recommendation regarding that trace flag? Apply it by default on new servers?

Is it meaningful for 2012? The article does not list anything regarding 2012.

All the scripts here are from SQL 2012. I would suggest that 4199 should be applied by default on new servers, yes. By all means do some testing though.

http://support.microsoft.com/kb/974006 says:

"Starting with Microsoft SQL Server 2000 Service Pack 3 (SP3), the SQL Server query processor team adopted a policy that any hotfix that could potentially affect the execution plan of a query must be controlled by a trace flag. Except for fixes to bugs that can cause incorrect results or corruption, these hotfixes are turned off by default, and a trace flag is required to enable the fix. This policy change helps avoid unexpected changes to the execution plan that may occur when a hotfix or a security update is installed."

So if something in the QO has been deemed worthy of a hotfix, you still need to turn on a trace flag to get the benefit of that fix. 4199 is how you turn these things on. I’d be surprised if you found a situation where 4199 was detrimental.

This is my absolute favourite sequence generator. Employed here to find gaps in a primary key.

No idea about the plan though…

```sql
;with
digits (i) as (
    select 1 as i
    union all select 2
    union all select 3
    union all select 4
    union all select 5
    union all select 6
    union all select 7
    union all select 8
    union all select 9
    union all select 0
),
sequence (i) as (
    select D1.i
        + (10*D2.i)
        + (100*D3.i)
        + (1000*D4.i)
        + (10000*D5.i)
        + (100000*D6.i)
    from digits as D1,
        digits as D2,
        digits as D3,
        digits as D4,
        digits as D5,
        digits as D6
)
select
    (select top 1 admcdt from adm where admkey < i order by admkey desc) as before,
    i as 'missing adm',
    (select top 1 admcdt from adm where admkey > i order by admkey asc) as after
from sequence
where i between 1 and (select max(admkey) from adm)
    and not exists (select 1 from adm where admkey=i)
order by i
```

All credit to — http://drsql.spaces.live.com/blog/cns!80677FB08B3162E4!1232.entry

Hi Nick,

Using ROW_NUMBER() is a better option for generating a table of numbers, as it realises it can stop if you only need a few dozen rows. Your version doesn’t get to leverage that. But actually, to find gaps, you don’t even need a table of numbers. Just use ROW_NUMBER(), and look for the times when the difference between the values with gaps and the rownum increases (indicating that things have been missed).

Hope this helps,

Rob
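A minimal sketch of the ROW_NUMBER() idea described in the reply above, using a made-up table variable in place of the adm table (the sample keys and the alias names are assumptions for illustration). Subtracting the row number from the key gives a value that stays constant within an unbroken run and jumps whenever keys are missing, so grouping on it yields the runs, and the gaps sit between them.

```sql
-- Hypothetical sample data standing in for adm.admkey.
DECLARE @adm TABLE (admkey int NOT NULL PRIMARY KEY);
INSERT @adm (admkey) VALUES (1), (2), (3), (7), (8), (12);

WITH numbered AS (
    SELECT admkey,
           admkey - ROW_NUMBER() OVER (ORDER BY admkey) AS grp  -- constant within a run of consecutive keys
    FROM @adm
)
SELECT MIN(admkey) AS run_start, MAX(admkey) AS run_end
FROM numbered
GROUP BY grp
ORDER BY run_start;
```

For the sample keys this returns the runs 1 to 3, 7 to 8 and 12 to 12, so the missing ranges are 4 to 6 and 9 to 11.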

Hi Rob,

yes, you’re right of course.

Though one thing is to identify the extents (upper and lower limit) of gaps, another is to identify the actual missing keys within the gaps, which is what mine does.

I gave it a try with ROW_NUMBER, but before I got it working I suddenly also remembered the new LAG and LEAD functions. This is what I got working:

```sql
;with cte as
(
    select
        lag([key]) over(order by [key]) as previous_key,  -- KEY is a reserved word in T-SQL, hence the brackets
        [key] as current_key
    from tbl
)
select
    previous_key as gap_start,
    current_key as gap_end
from cte
where current_key-previous_key > 1
order by current_key
```

Hi Nick,

Yes, that method works very well for finding gaps too.

Rob