SQL 2012 was a big release for working out the median in SQL Server, with the advent of the function PERCENTILE_CONT(). It’s a very elegant way of working out the median (hint, that’s the 0.5 point), even though it’s not actually an aggregate function, as I’ve written before.

Plus – it doesn’t even perform well. About a year ago, Aaron Bertrand (@aaronbertrand) wrote a fantastic post about different methods for getting medians, and showed that PERCENTILE_CONT() is actually one of the slowest methods, and that the best method is to use an idea from Peter Larsson (@SwePeso) that uses an OFFSET-FETCH clause to grab the rows of interest before doing an average of them.

Except that the OFFSET-FETCH clause was also new in 2012. So if you’re stuck on SQL 2008 R2 and earlier, you’re a bit more stuck.

All the pre-SQL 2012 methods that Aaron showed used ROW_NUMBER() except one – which used a combination of MIN/MAX over each half of the data. But one method that Aaron didn’t explore in his post was to simulate OFFSET-FETCH in earlier versions. Let me show you…

Here’s the OFFSET-FETCH method. Notice that it fetches either 1 or 2 rows (depending on whether the overall count is 1 or 2), but offsets by just under half of the set.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

SELECT d.SalesPerson, w.Median FROM ( SELECT SalesPerson, COUNT(*) AS y FROM dbo.Sales GROUP BY SalesPerson ) AS d CROSS APPLY ( SELECT AVG(0E + Amount) FROM ( SELECT z.Amount FROM dbo.Sales AS z WHERE z.SalesPerson = d.SalesPerson ORDER BY z.Amount OFFSET (d.y - 1) / 2 ROWS FETCH NEXT 2 - d.y % 2 ROWS ONLY ) AS f ) AS w(Median); |

What my pre-2012-compatible version does is to fetch slightly MORE than the set first, and then get the top 1 or 2 but in DESC order.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

SELECT d.SalesPerson, w.Median FROM ( SELECT SalesPerson, COUNT(*) AS y FROM dbo.Sales GROUP BY SalesPerson ) AS d CROSS APPLY ( SELECT AVG(0E + Amount) FROM ( SELECT TOP (2 - d.y % 2) Amount FROM ( SELECT TOP (d.y / 2 + 1) z.Amount FROM dbo.Sales AS z WHERE z.SalesPerson = d.SalesPerson ORDER BY z.Amount ) AS t ORDER BY Amount DESC ) AS f ) AS w(Median); |

With OFFSET-FETCH, we’re grabbing the rows we want by skipping over the rows we’re not interested in until we find the ones that we are interested in. In the TOP/TOPDESC, we’re identifying the rows we want by the fact that they’re the bottom of the top slightly-more-than-half set.

Other than that, the idea is exactly the same. The results are identical, but what about the performance?

First, let’s give you the code to set up your environment (as found in Aaron’s post) – I used a clustered index.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

CREATE TABLE dbo.Sales(SalesPerson INT, Amount INT); GO --CREATE CLUSTERED INDEX x ON dbo.Sales(SalesPerson, Amount); --CREATE NONCLUSTERED INDEX x ON dbo.Sales(SalesPerson, Amount); --DROP INDEX x ON dbo.sales; ;WITH x AS ( SELECT TOP (100) number FROM master.dbo.spt_values GROUP BY number ) INSERT dbo.Sales WITH (TABLOCKX) (SalesPerson, Amount) SELECT x.number, ABS(CHECKSUM(NEWID())) % 99 FROM x CROSS JOIN x AS x2 CROSS JOIN x AS x3; |

What I want to do to evaluate this is to look at the query plans. Once I’ve done that, I’ll make a comment about the performance and where it fits into the mix from Aaron’s post.

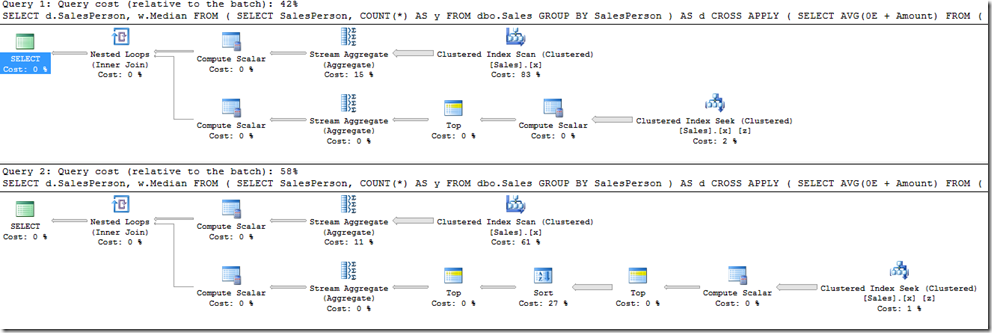

So those plans… OFFSET-FETCH method first, followed by the TOP/TOPDESC method. I’m using a clustered index on the data – a nonclustered index gives the same shape but with nonclustered index operations instead of clustered index operations. Heaps are a different story that I’m not exploring here.

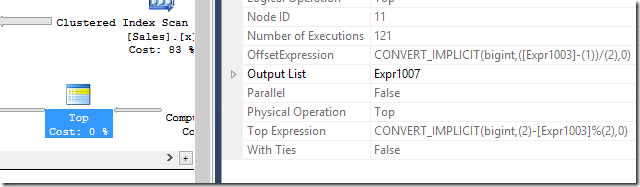

As you’d expect, there’s a lot of similarity. Both use Nested Loops, grabbing the Counts from a Scan on the outer branch, with a Seek on the inner branch. And both inner branches have a Top Operator pulling the data out of a Seek. But the TOP/TOPDESC method has TWO Top operators, with a Sort in between. This is because of the ‘TOPDESC’ bit. If we had a ‘Bottom’ operator, then that would avoid the need for a Sort, but no such animal exists, and it does ‘Bottom’ by doing a Top of re-Sorted data. It’s very disappointing. The Top operator in the OFFSET-FETCH method has a new property called OffsetExpression, which it uses to skip over as many rows as it needs – it’s simply not supported pre-2012.

(Quick side note: the arrow between the Compute Scalar and the right-most Top operator in both plans is quite thin – much thinner that you might expect. This is only a quirk of the plan because the Actuals haven’t been reported here. MSDN (https://technet.microsoft.com/en-us/library/ms178082.aspx) says: “Compute Scalar operators that appear in Showplans generated by SET STATISTICS XML might not contain the RunTimeInformation element. In graphical Showplans, Actual Rows, Actual Rebinds, and Actual Rewinds might be absent from the Properties window when the Include Actual Execution Plan option is selected in SQL Server Management Studio. When this occurs, it means that although these operators were used in the compiled query plan, their work was performed by other operators in the run-time query plan.” Therefore, the arrow coming out of the Compute Scalar operator is the width of the estimated number of rows, because it doesn’t have the actual number of rows. But it’s a Compute Scalar – it’s not going to change the number of rows, and you should consider the width of the arrow as being the width of the arrow going into it.)

Of course, this TOP/TOPDESC method is slower than OFFSET-FETCH. If we had a ‘Bottom’ operator, I think it wouldn’t be very much slower, but here we have a Sort operator! And those things are bad. The plans estimated that the cost of the Sort would be 27% of the total query, and that the ratio between the two queries would be 58:42, which is 1.38:1. But remember that those Cost percentages are based on estimated values, and we know those estimates are quite a long way out.

So instead, we use a more empirical method, which is to run them against each other.

On my machine (a Surface Pro 2), with a warm cache, the OFFSET-FETCH method took around 380ms, compared to around 570ms for the TOP/TOPDESC. It’s definitely slower – no surprises there. It’s a good 50% slower, if not more. But this still makes it faster than any of the pre-SQL 2012 versions that Aaron used.

I’m sure you’re going to point out that I’m clearly running this on a version of SQL Server that is at least 2012… so I ran it on a SQL 2008 R2 box as well, and found that the plan was identical as shown here, and that it was about 30% faster than the “2005_3” version from Aaron’s post with an index applied.

So if you’re using SQL 2008 R2 (or earlier) still, then don’t dismiss the best-performing median function from Aaron’s post (thanks again, Peso!), but instead, consider coming up with a 2008R2-compatible version, as I’ve done here.

Update: Another method is to consider simply filtering on ROW_NUMBER(), which isn’t included in Aaron’s post either. It’s still good, but doesn’t quite perform as quickly as the TOP/TOPDESC method on the million-row set, because it has to figure out the ROW_NUMBER() for a lot of rows. The OffsetExpression property in the Top operator of SQL 2012+ is your friend.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

SELECT d.SalesPerson, w.Median FROM ( SELECT SalesPerson, COUNT(*) AS y FROM dbo.Sales GROUP BY SalesPerson ) AS d CROSS APPLY ( SELECT AVG(0E + Amount) FROM ( SELECT z.Amount, rn = ROW_NUMBER() OVER (ORDER BY z.Amount) FROM dbo.Sales AS z WHERE z.SalesPerson = d.SalesPerson ) AS f WHERE f.rn BETWEEN (d.y + 1) / 2 AND (d.y + 2) / 2 ) AS w(Median); |

This Post Has 3 Comments

The median was a hot topic in SQL in the early 1990’S . There were two newsstand magazines for databases, DBMS and DATABASE PROGRAMMING & DESIGN. They started at two separate publishers, but thanks to mergers and buyout found themselves under CMP and they were merged into Intelligent Enterprise later. I wrote columns in both magazines. Chris Date got my old position when I moved to DBMS. He has collected his old columns into a series of books form Addison-Wesley.

Chris would write a column, then i would write a reply, much like the famous Fred Allen & Jack Benny “feud” on early radio (http://www.otrcat.com/jack-benny-and-fred-allen-feud-p-48618.html). We wanted to sell both magazines!

Chris and I both finished a column with an SQL puzzle or problem. The median became the hot topic in 1992 and 1993, after the SQL-92 standard came. Date proposed two different solutions for the median. His first solution was based on the fact that if you duplicate every row in a table, the median will stay the same. The duplication will guarantee that you always work with a table that has an even number of rows. The first version that appeared in his column was wrong and drew some mail from me and from others who had different solutions. Here is a corrected version of his first solution, with his famous Parts table.

CREATE VIEW Temp1

AS SELECT weight FROM Parts

UNION ALL

SELECT weight FROM Parts;

CREATE VIEW Temp2

AS SELECT weight

FROM Temp1

<= (SELECT COUNT(*)

FROM Temp1 AS T1

WHERE T1.weight >= Temp1.weight)

AND (SELECT COUNT(*) FROM Parts)

<= (SELECT COUNT(*)

FROM Temp1 AS T2

WHERE T2.weight <= Temp1.weight);

SELECT AVG(DISTINCT weight) AS median

FROM Temp2;

This involves the construction of a doubled table of values, which can be expensive in terms of both time and storage space. You will also find that this requires a good implementation of the SQL-92 standard that allows you to use a correlated scalar subquery in place of a scalar value expression. Most SQL implementations are not yet that sophisticated.

The use of AVG(DISTINCT x) is important because leaving it out would return the simple average instead of the median. Consider the set of weights (12, 17, 17, 14, 12, 19). The doubled table, Temp1, is then (12, 12, 12, 12, 14, 14, 17, 17, 17, 17, 19, 19). But because of the duplicated values, Temp2 becomes (14, 14, 17, 17, 17, 17), not just (14, 17). The simple average is (96 / 6.0) = 16; it should be (31 / 2.0) = 15.5 instead.

My first solution involved a slight modification of Date’s solution to avoid the use of a doubled table, but it depends on a SQL-92 implementation that has a CEILING() function or particular vendor of rounding and truncation.

SELECT MIN(weight) — smallest value in upper half

FROM Parts

WHERE weight IN (SELECT P1.weight

FROM Parts AS P1, Parts AS P2

WHERE P2.weight >= P1.weight

GROUP BY P1.weight

HAVING COUNT(*) <=

(SELECT CEILING(COUNT(*) / 2.0)

FROM Parts))

UNION

SELECT MAX(weight) — largest value in lower half

FROM Parts

WHERE weight IN (SELECT P1.weight

FROM Parts AS P1, Parts AS P2

WHERE P2.weight <= P1.weight

HAVING COUNT(*) <=

(SELECT CEILING(COUNT(*) / 2.0)

FROM Parts));

or using the same idea and a CASE expression:

FROM (SELECT MAX(weight)

FROM Parts AS B1

WHERE (SELECT COUNT(*) + 1

FROM Parts

WHERE weight < B1.weight)

<= (SELECT CEILING (COUNT(*)/2.0)

FROM Parts)

UNION ALL

SELECT MAX(weight)

FROM Parts AS B

WHERE (SELECT COUNT(*) + 1

FROM Parts

WHERE weight < B.weight)

<= CASE (SELECT MOD (COUNT(*), 2)

FROM Parts)

WHEN 0

THEN (SELECT CEILING (COUNT(*)/2.0) + 1

FROM Parts)

ELSE (SELECT CEILING (COUNT(*)/2.0)

FROM Parts)

END) AS Medians(weight);

At this point, we got more and more answers. And the code got more and more complicated. If you want to look at it, it is in Chapter 23 of the Third Edition of SQL FOR SMARTIES. I would not use any of them today, but I have a discussion and a detailed explanation of how I improved my code.

Date’s second solution in 1995 was based on Celko’s median, folded into one query where I had used VIEWs for readability (CTE did not exist yet).

SELECT AVG(DISTINCT Parts.weight) AS median

FROM Parts

WHERE Parts.weight IN

(SELECT MIN(weight)

FROM Parts

WHERE Parts.weight IN

(SELECT P2.weight

FROM Parts AS P1, Parts AS P2

WHERE P2.weight <= P1.weight

GROUP BY P2.weight

HAVING COUNT(*)

<= (SELECT CEILING(COUNT(*) / 2.0)

FROM Parts))

UNION

SELECT MAX(weight)

FROM Parts

WHERE Parts.weight IN

(SELECT P2.weight

FROM Parts AS P1, Parts AS P2

WHERE P2.weight >= P1.weight

GROUP BY P2.weight

HAVING COUNT(*)

<= (SELECT CEILING(COUNT(*) / 2.0)

FROM Parts)));

Date mentions that this solution will return a NULL for an empty table and that it assumes there are no NULLs in the column. If there are NULLs, the WHERE clauses should be modified to remove them.

Rory Murchison was a regular contributor to puzzle answers. He came up with the idea of concatenating the key to each alue to make sure that every value is seen as a unique entity. Selecting the middle values is then a special case of finding the n-th item in the table.

My Second Median used a working table with the values and a tally of their occurrences from the

original table. This working table should be quite a bit smaller than the original table, and very fast to construct if there is an index on the target column. An awful self-join then gave you the counts of rows before and after each row’s value. Finding the middle was easy.

— construct table of cumulative tallies

CREATE TABLE Summary

(weight REAL NOT NULL,

occurs INTEGER NOT NULL, — number of occurrences

pretally INTEGER NOT NULL, — cumulative tally before

posttally INTEGER NOT NULL); — cumulative tally after

SELECT AVG(S3.weight) AS median

FROM Summary AS S3

WHERE (S3.posttally > (SELECT MAX(posttally) / 2.0 FROM Summary)

AND S3.pretally < (SELECT MAX(posttally) / 2.0 FROM Summary))

OR S3.pretally = (SELECT MAX(posttally) / 2.0 FROM Summary)

OR S3.posttally = (SELECT MAX(posttally) / 2.0 FROM Summary);

A simple median technique based on all of these methods was proposed by Philip Vaughan of San Jose, CA. It derives a VIEW with unique weights and number of occurrences and then a VIEW of the middle weights.

Anatoly Abramovich, Yelena Alexandrova, and Eugene Birger presented a series of articles in SQL Forum magazine on computing the median (SQL Forum 1993, 1994). They define a characteristic

function, which they call delta, using the SIGN() function. The delta or characteristic function accepts a Boolean expression as an argument and returns one if it is TRUE and zero if it is FALSE or UNKNOWN. In SQL-92 we have a CASE expression, which can be used to construct the delta function. CASE was new to SQL-92.

The authors also distinguish between the statistical median, whose value must be a member of the set, and the financial median, whose value is the average of the middle two members of the set. A statistical median exists when there is an odd number of items in the set. If there is an even number of items, you

must decide if you want to use the highest value in the lower half (they call this the left median) or the lowest value in the upper half (they call this the right median).

The left statistical median of a unique column can be found with this query, if you will assume that we have a column called bin which represents the storage location of a part.

SELECT P1.bin

FROM Parts AS P1, Parts AS P2

GROUP BY P1.bin

HAVING SUM(CASE WHEN (P2.bin <= P1.bin) THEN 1 ELSE 0 END)

= (COUNT(*) / 2.0);

Changing the direction of the theta test in the HAVING clause will allow you to pick the right statistical median Getting the other medians involves playing with this self-join query and the CASE expressions.

The statistical median of a column with duplicate values can be found with a query based on the same ideas, but you have to adjust the HAVING clause to allow for overlap.

My Third Median

Another approach made easier with SQL-92 involves looking at a picture of a line of sorted values and seeing where the median would fall. Every value in column weight of the table partitions the table into three sections, the values which are less than weight, equal to weight or greater than weight. We can get a profile of each value with a tabular subquery expression.

Now the question is how to define a median in terms of the partitions. Clearly, the definition of a median means that if (lesser = greater) then weight is the median.

SELECT AVG(DISTINCT weight)

FROM (SELECT P1.pno, P1.weight,

SUM(CASE WHEN P2.weight < P1.weight

SUM(CASE WHEN P2.weight = P1.weight

THEN 1 ELSE 0 END),

SUM(CASE WHEN P2.weight > P1.weight

THEN 1 ELSE 0 END)

FROM Parts AS P1, Parts AS P2

GROUP BY P1.pno, P1.weight)

AS Partitions (pno, weight, lesser, equal, greater)

WHERE lesser = greater

OR (lesser <= (SELECT COUNT(*) FROM Parts)/2.0

AND greater <= (SELECT COUNT(*) FROM Parts)/2.0);

It is also worth noting that you can use either AVG(DISTINCT i) or AVG(i) in the SELECT clause. The AVG(DISTINCT i) will return the usual median when there are two values. This happens when you have an even number of rows and a partition in the middle, such as (1,2,2, 3, 3, 3) which has (2, 3) in the middle, which gives us 2.5 for the median. The AVG(i) will return the weighted median instead. This happens when you also factor in the number of times the two values in the middle of a table with an even number of rows. The table with (1,2,2, 3, 3, 3) would return (2,2, 3, 3, 3) in the middle, which gives us 2.6 for the weighted median. The weighted median is a more accurate description of the data.

I sent this attempt to Richard Romley, who made it quite a bit simpler: , but let me take you thru the steps so you can see the reasoning.

Look at the WHERE clause. It could use some algebra and since it deals only with aggregate functions and scalar subqueries, you could move it into a HAVING clause. Moving things from the WHERE clause into the HAVING clause in a grouped query is important for performance, but it is not always possible.

SELECT AVG(DISTINCT weight)

FROM (SELECT P1.weight

FROM Parts AS P1, Parts AS P2

GROUP BY P1.pno, P1.weight

THEN 1 ELSE 0 END)

>= ABS(SUM(CASE WHEN P2.weight < P1.weight THEN 1

WHEN P2.weight > P1.weight THEN -1

ELSE 0 END)))

AS Partitions;

If you prefer to use functions instead of a CASE expression, then use this version of the query:

SELECT AVG(DISTINCT weight)

FROM (SELECT P1.weight

FROM Parts AS P1, Parts AS P2

GROUP BY P1.pno, P1.weight

HAVING SUM(ABS(1 – SIGN(P1.weight – P2.weight))

>= ABS(SUM(SIGN (P1.weight – P2.weight)))

AS Partitions;

Thank you Joe – terrific fun to read this.

Joe, please run your versions against the others using Aaron Bertrand’s 1M row test harness and report back on their performance.