I spent some time explaining SQL Server Replication to someone recently. They said they hadn’t ever really understood the concepts, and that I’d managed to help. It’s inspired me to write a post that I wouldn’t normally do – a “101” post. I’m not trying to do a fully comprehensive piece on replication, just enough to be able to help you get the concepts.

The way I like to think about replication is by comparing it to magazines. The analogy only goes so far, but let’s see how we go.

The things being replicated are articles. A publication (the responsibility of a publisher) is a collection of these articles. At the other end of the process are people with subscriptions. It’s just like when my son got a magazine subscription last Christmas. Every month, the latest set of articles got delivered to our house.  (The image here isn’t my own – but feel free to click on it and subscribe to FourFourTwo – excellent magazine, particularly when they’re doing an article about the Arsenal.) Most of the work is done by agents, such as the newsagent that gets it to my house.

(The image here isn’t my own – but feel free to click on it and subscribe to FourFourTwo – excellent magazine, particularly when they’re doing an article about the Arsenal.) Most of the work is done by agents, such as the newsagent that gets it to my house.

In SQL Server, these same concepts hold. The objects which are being replicated are articles (typically tables, but also stored procedures, functions, view definitions, and even indexed views). You might not replicate your whole database – just the tables and other objects of interest. These articles make up a publication. Replication is just about getting that stuff to the Subscribers.

Of course, the magazine analogy breaks down quite quickly. Each time my son got a new edition, the articles were brand new – material he’d never seen before. In SQL Replication, the Subscribers probably have data from earlier. But this brings us to look at a key concept in SQL Replication – how the stupid thing starts.

Regardless of what kind of replication you’re talking about, the concept is all about keeping Subscribers in sync with the Publisher. You could have the whole table move across every time, but more than likely, you’re going to just have the changes go through. At some point, though, the thing has to get to a starting point.

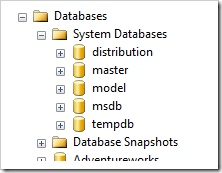

This starting point is (typically) done using a snapshot. It’s not a “Database Snapshot” like what you see in the Object Explorer of SQL Server Management Studio – this is just a starting point for replication. It’s a dump of all the data and metadata that make up the articles, and it’s stored on the file system. Not in a database, on the file system. A Subscriber will need this data to be initialised, ready for a stream of changes to be applied.

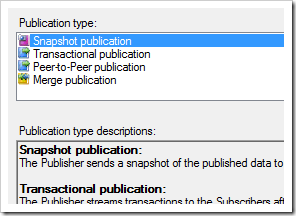

It’s worth noting that there is a flavour of replication which just uses snapshots, known as Snapshot Replication. Every time the subscriber gets a refresh of data, it’s the whole publication that has to flow down. This might be fine for small pieces of data, it might not for others.

(There are other ways to get started too, such as by restoring a backup, but you should still be familiar with the concept of snapshots for replication.)

To get in sync, a subscriber would need the data in the snapshot for initialisation, and then every change that has happened since. To reduce the effort that would be required if something went drastically wrong and a new subscription became needed, snapshots can be recreated at regular intervals. This is done by the Snapshot Agent, and like all agents, can be found as a SQL Server Agent job.

The middle-man between the Publisher and the Subscribers is the Distributor. The Distributor is essentially a bunch of configuration items (and as we’ll see later, changes to articles), stored in the distribution database – a system database that is often overlooked.  If you query sys.databases on a SQL instance that has been configured as a Distributor you’ll see a row for the distribution database. It won’t have a database_id less than 5, but it will have a value of 1 in the is_distributor column. The instance used as the Distributor is the one whose SQL Server Agent runs most of the replication agents, including the Snapshot Agent.

If you query sys.databases on a SQL instance that has been configured as a Distributor you’ll see a row for the distribution database. It won’t have a database_id less than 5, but it will have a value of 1 in the is_distributor column. The instance used as the Distributor is the one whose SQL Server Agent runs most of the replication agents, including the Snapshot Agent.

If you’re not doing Snapshot Replication, you’re going to want to get those changes through. Transactional Replication, as the name suggests, involves getting transactions that affect the published articles out to the subscribers. If the replication has been set up to push the data through, this should be quite low latency.

So that SQL Server isn’t having to check every transaction right in the middle of it, there’s a separate agent that looks though the log for transactions that are needed for the replication, copying them across to the distribution database, where they hang around as long as they’re needed. This agent is the Log Reader Agent, and also runs on the Distributor. You can imagine that there is a potential performance hit if this is running on a different machine to the Publisher, and this is one of the influencing factors that means that you’ll typically have the Distributor running on the Publisher (although there are various reasons why you might not).

Now we have a process which is making sure that initialisation is possible by getting snapshots ready, and another process which is looking for changes to the articles. The agent that gets this data out to Subscribers is the Distribution Agent. Despite its name, it can run at the Subscriber, if the Subscriber is set to pull data across (good for occasionally connected systems). This is like with my magazine – I might prefer to go to the newsagent and pick it up, if I’m not likely to be home when the postman comes around. In effect, my role as Subscriber includes doing some distribution if I want to pull the data through myself.

These three agents, Snapshot Agent, Log Reader Agent and Distribution Agent, make up the main agents used for Transactional Replication, which is probably the most common type of replication around. Snapshot Replication doesn’t use the Log Reader Agent, but still needs the other two.

Now let’s consider the other types of replication.

Merge Replication involves having subscribers that can also change the data. It’s similar to Transactional Replication with Updateable Subscribers, which has been deprecated. These changes are sent back to the Merge Agent, which works out what changes have to be applied. This is actually more complicated than you might expect, because it’s very possible to have changes made in multiple places and for a conflict to arise. You can set defaults about who wins, and can override manually through the Replication Monitor (which is generally a useful tool for seeing if Subscribers are sufficiently in sync, testing the latency, and so on). Updateable Subscribers end up using the Queue Reader Agent instead of the Merge Agent. They’re slightly different in the way they run, but I consider them to be quite similar in function, as they both involve getting the data back into the publisher when changes have been made elsewhere.

Merge Replication involves having subscribers that can also change the data. It’s similar to Transactional Replication with Updateable Subscribers, which has been deprecated. These changes are sent back to the Merge Agent, which works out what changes have to be applied. This is actually more complicated than you might expect, because it’s very possible to have changes made in multiple places and for a conflict to arise. You can set defaults about who wins, and can override manually through the Replication Monitor (which is generally a useful tool for seeing if Subscribers are sufficiently in sync, testing the latency, and so on). Updateable Subscribers end up using the Queue Reader Agent instead of the Merge Agent. They’re slightly different in the way they run, but I consider them to be quite similar in function, as they both involve getting the data back into the publisher when changes have been made elsewhere.

Peer-to-Peer Replication is the final kind. This is really a special type of Transactional Replication, in which you have multiple publishers, all pushing data out at each other. It’s the option that is considered closest to a High Availability system, and is good across geographically wide environments, particularly if connections are typically routed to the closest server. Consider the example of servers in the UK, the US and Australia. Australian users can be connected to the local server, knowing the changes are going to be pushed out to the UK and US boxes. They’re set up in a topology, with each server considered a node. Each server keeps track of which updates it’s had, which means they should be able to keep in sync, regardless of when they have downtime. If Australian changes are sent to the UK but not the US, then US can be updated by the UK server if that’s easier.

Replication can feel complex. There are a lot of concepts that are quite alien to most database administrators. However, the benefits of replication can be significant, and are worth taking advantage of in many situations. They’re an excellent way of keeping data in sync across a number of servers, without many of the server availability hassles associated with log-shipping or mirroring. It can definitely help you achieve scale-out environments, particularly if you consider Peer-to-Peer, which can help you offload your connections to other servers, knowing the key data can be kept up-to-date easily.

I haven’t tried to be completely comprehensive in this quick overview of replication, but if you’re new to the concepts, or you’re studying for one of the MCITP exams and need to be able to get enough of an understanding to get you by, then I hope this has helped demystify it somewhat.

There’s more in SQL Books Online, of course – a whole section on Replication. If what I’ve written makes sense, go exploring, and try to get it running on your own servers too. Maybe my next post will cover some of that.

This Post Has 17 Comments

SQL Server Central has a StairWay tutorial on replication, you might want to point that out people also.

http://www.sqlservercentral.com/stairway/72401/

~Kalen

Thanks Kalen.

Readers: Sebastian Meine has written a series on replication that you can see over at SQLServerCentral.com. Check out the link in Kalen’s comment for more.

Hi Rob,

There is absolutely nothing wrong with a 101 level article. I think the thing that confuses people the most with replication is the fact that there is only so much you can get out of management studio, when things go awry. The other main stumbling block is with conflicts encountered with merge replication, particularly when identities are used to seed primary keys, Hillary Cotter’s "Identity crisis" article which can be found on simple-talk is an excellant reference for dealing with this.

I hope you and your family are having a happy Christmas.

Chris

Yes, I think there are a number of things which people struggle with around replication, and hopefully this can help people grasp the basic concepts. Maybe I’ll do a post on conflict resolution soon.

I Rob, I agree with you: SQL Replication is nothing to worry about.

On the other hand, I think it’s quite complex to implement in a big production environment. I’ve got some experience of Transactional Replication but, apart from the key concepts, all I know about it I’ve learned on the job (mistakes included of course).

Can you suggest any good advanced self-training book about SQL replications?

Thanks

Hi Francesco – I’ve never read a book on replication, so I can’t really recommend one. Sorry.

Bad luck. Someone should start thinking of writing a good book about that subject.

For more SQL replication info checkout Paul Ibison’s excellent site: http://www.replicationanswers.com

Hilary Cotter’s SQL 2000 replication book was invaluable to me, a possible update required?

Rgds,

Scott

@Francesco – There’s an excellent chapter, by Hilary Cotter in the first SQL Server MVP Deep Dives book, that looks at performance tuning Transactional Replication topologies. It contains the best (IMHO) explanation of how to tune the performance of the various Replication agents. (http://www.manning.com/nielsen/)

For general replication performance and tuning knowledge, see the REPLTLK blog on MSDN (http://blogs.msdn.com/b/repltalk/)

There are some replication performance tuning guides out there too, buried in various blogs however, I don’t have the references to hand. I’ll see what I can dig up….

Thanks @Scott & @John.

I’ve never read that book but I knew already it was a really good one 🙂

about guides, I’ve found this quite usefull "Achieving Excellence in Designing and Maintaining SQL Server Transactional Replication Environments" (http://technet.microsoft.com/en-us/library/jj215849.aspx)

This one is good for beginners to intermediate: Pro SQL Server 2008 Replication (http://www.amazon.com/Server-2008-Replication-Experts-Voice/dp/143021807X)

thanks all for the great info.

hope this is the place for my question.

should peer to peer transactional replication ( with updates from peers enabled ) withstand network disconnects easily ?

we are using it in an application, set up sqlprime as the primary and sqlsecond as the failover server

all goes well until we test the redundancy by disconnecting the network on sqlprime .

the app find the failover, writes data but when sqlprime ( primary server) comes back, all replication is stopped with strange error ( mainly directing to login failures ( which are bogus as passwords did not change. )

so , are we testing something this setup should not stand ? ( network interruptions ? )

thanks

dave.

Hi Dave,

It should be able to resume. It will be helpful to see the actual errors.

Maybe drop me an email and I’ll see what I can do. rob at lobsterpot.com.au is best.

Rob

i have 3 Server using Sql Server 2008 R2 Standard edition located in 3 different sites and didnt use domain. i want to replicate each transaction from these server to my HQ server that also using Sql Server 2008 R2 standard.

my question is, is it possible to replicate in non-domain environment?

if the answer is yes, how the server can communicate in different network as the replication cant use Ip Address as their communication?

how do I set up peer to peer replication for 2012

do I do a snapshot or just go to peer to peer

How do I determine the agent for a specific replication job?